
SmartDV Technologies has introduced the first HBM3 memory controller, which developers of systems-on-chip (SoCs) can license. HBM3 is expected to offer twice the bandwidth of HBM2E, as well as a capacity of up to 64 GB per stack, leaving headroom for many years to come.

The first HBM3 controller

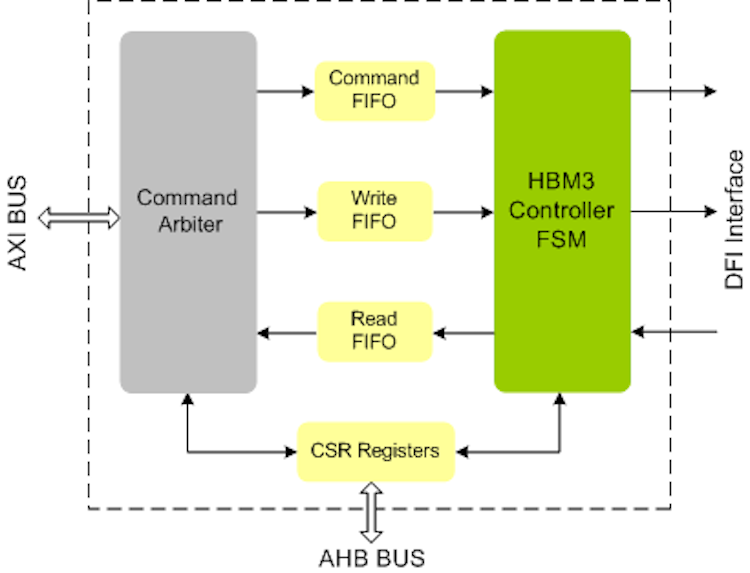

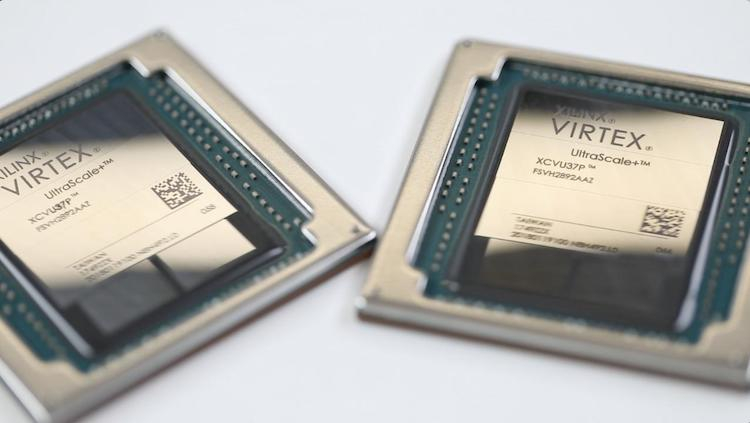

So far, the JEDEC committee has approved only a draft of HBM3. The specification has not been formally ratified, but draft version 0.7 is generally regarded as the Full Draft: it covers all the features of the standard and defines all the electrical characteristics of the new technology. As a result, developers can start designing their own implementations and the tools to verify them. SmartDV Technologies is the first company to introduce an HBM3 controller that chip designers can license. The controller's operation was validated on field-programmable gate arrays (FPGAs). Those who want to verify that the final SoC works correctly can use verification IP from Cadence or SmartDV.

SmartDV’s HBM3 controller can connect to almost any processor that uses either standard on-chip interconnects (AMBA APB/AHB/AXI, VCI, OCP, Avalon, PLB, TileLink, Wishbone) or proprietary ones. The controller supports up to 16 AXI ports, DFI 4.0/5.0 interfaces, a 512-bit data bus, error correction (ECC), pseudo channels, and other technologies familiar from HBM2/HBM2E.

The availability of a licensable HBM3 controller lets SoC developers add support for the technology to chips that will reach the market in one and a half to two years.

HBM2 / HBM2E: up to 24 GB, up to 410 GB/s

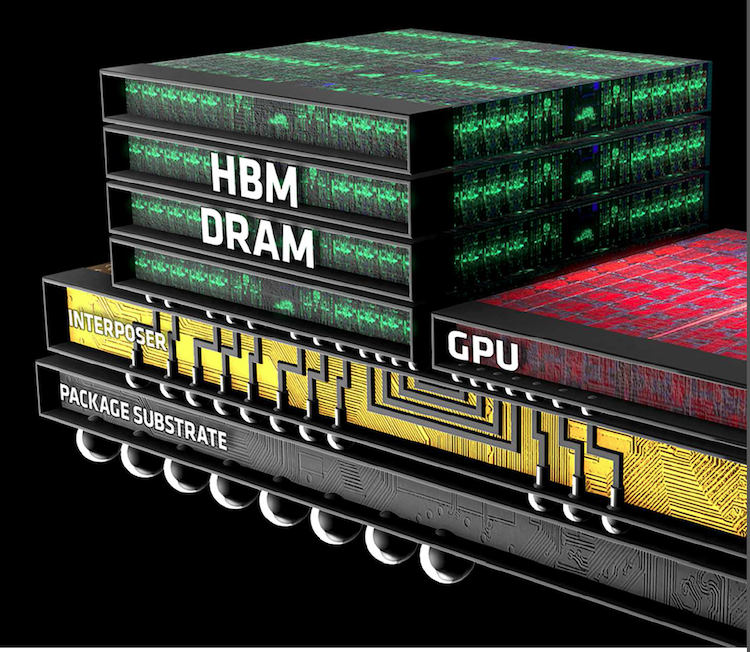

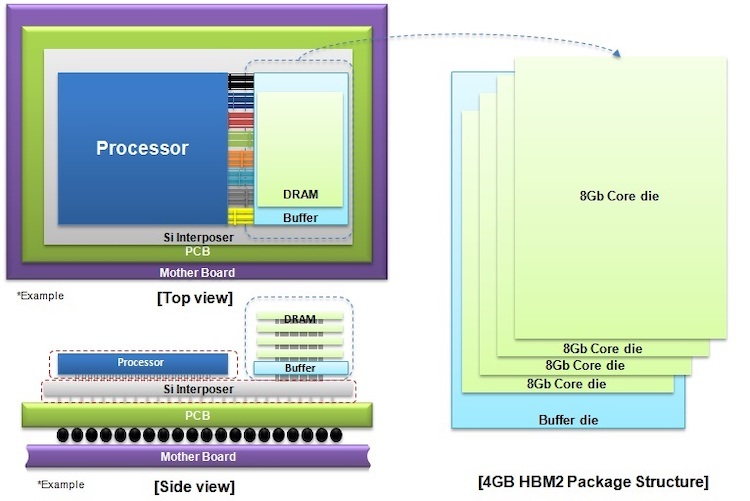

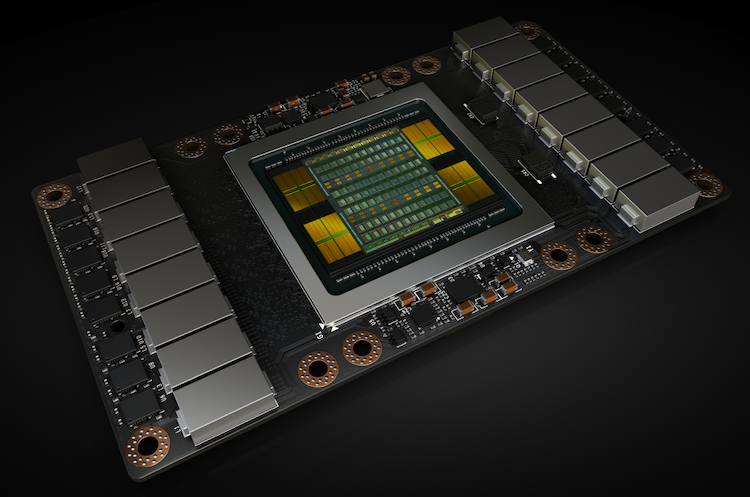

HBM/HBM2 memory stacks consist of several DRAM devices interconnected by thousands of through-silicon vias (TSVs) and mounted on a base (buffer) logic die that coordinates their operation. Each HBM/HBM2 stack connects to the memory controller over a 1024-bit bus (itself divided into eight 128-bit channels) implemented in a silicon interposer.

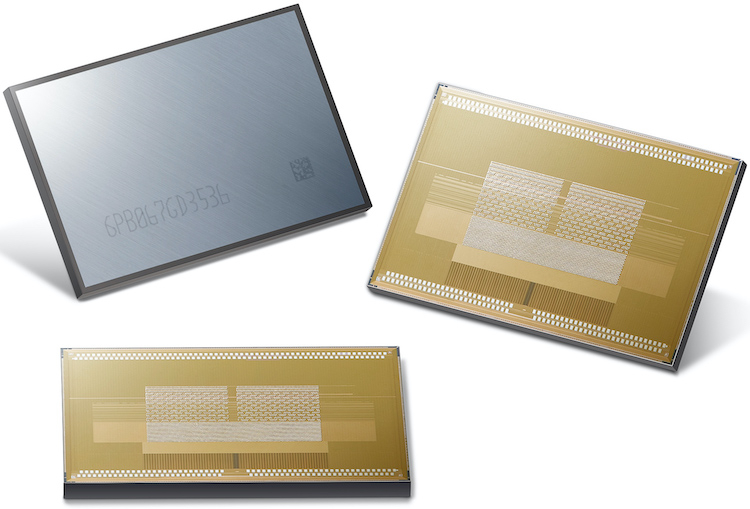

This architecture delivers very high memory bandwidth. For example, a Samsung Flashbolt stack with eight DRAM devices, a 1024-bit bus, and a data transfer rate of 3200 MT/s offers a bandwidth of 410 GB/s, and four such stacks offer 1.64 TB/s (for comparison, the memory bandwidth of the NVIDIA Titan RTX is 672 GB/s). HBM3 goes further.
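
The bandwidth figures above follow from simple arithmetic: bus width times transfer rate, divided by eight bits per byte. A minimal sketch of that calculation, where the function name `peak_bandwidth_gb_s` is purely illustrative and decimal units (1 GB = 1000 MB) are assumed:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, rate_mt_s: int) -> float:
    """Peak bandwidth in GB/s (decimal) for a bus width and transfer rate."""
    # bits per transfer * million transfers/s = Mbit/s; /8 gives MB/s; /1000 gives GB/s
    return bus_width_bits * rate_mt_s / 8 / 1000


# Figures quoted in the text: 1024-bit bus at 3200 MT/s
per_stack = peak_bandwidth_gb_s(1024, 3200)
four_stacks = 4 * per_stack

print(f"one stack:   {per_stack:.1f} GB/s")
print(f"four stacks: {four_stacks / 1000:.2f} TB/s")
```

The per-stack result is 409.6 GB/s, which the article rounds to 410 GB/s.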

HBM3: up to 64 GB, up to 819.2 GB/s

Since JEDEC has not yet published the HBM3 standard, we can judge the capabilities of the new memory only superficially.

Judging by Cadence data, the developers of HBM3 aim to increase the number of memory devices in a stack to 16 and the data transfer rate to 6400 MT/s, the latter by doubling the burst length to BL = 8. An advanced HBM3 stack could therefore offer a capacity of 64 GB and a bandwidth of 819.2 GB/s. It’s worth noting that the SmartDV HBM3 controller supports up to 1 GB of memory per 128-bit channel.
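
As a rough cross-check, the quoted HBM3 figures can be reproduced with the same kind of arithmetic. This is only a sketch: it assumes the bus stays 1024 bits wide, as in HBM2E (the text does not state the HBM3 bus width, but that value is consistent with the 819.2 GB/s figure), and all constant names are hypothetical.

```python
BUS_WIDTH_BITS = 1024     # assumption: same bus width as HBM2 / HBM2E
RATE_MT_S = 6400          # transfer rate attributed to Cadence data
STACK_CAPACITY_GB = 64    # maximum stack capacity quoted in the text
DRAM_DEVICES = 16         # memory devices per stack quoted in the text

# Peak bandwidth: bits per transfer * MT/s, converted to decimal GB/s
bandwidth_gb_s = BUS_WIDTH_BITS * RATE_MT_S / 8 / 1000

# Capacity each DRAM device would need for a fully populated stack
capacity_per_device_gb = STACK_CAPACITY_GB / DRAM_DEVICES

print(f"HBM3 peak bandwidth: {bandwidth_gb_s:.1f} GB/s")
print(f"capacity per DRAM device: {capacity_per_device_gb:.0f} GB")
```

Under these assumptions, a 64 GB stack of 16 devices implies 4 GB per DRAM device.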

In terms of operating modes, HBM3 will not differ much from HBM2E, so adopting the new memory should not be difficult.

What about the price?

As experience with HBM2 shows, TSVs are extremely difficult to manufacture, the base die is not easy to connect to the memory devices, and the interposer substrate is very expensive.

HBM3 is set to increase the number of memory devices, which will increase the number of TSVs and complicate the structure of the stack. On top of that, the base logic die itself will become more complex. At the same time, 16 memory devices will generate a lot of heat, which will make the whole stack harder to cool. As a general rule, added complexity raises the price, so HBM3-based end products are likely to cost more than HBM2E-based ones.

HBM3 appears to be designed primarily for specialized accelerators and complex multi-chip systems that need high memory bandwidth and are not cost-sensitive. Only time will tell whether HBM3-based consumer products will appear, but that is unlikely in the foreseeable future.

As for when HBM3 will arrive, it is worth remembering that the new standard is not yet formally ready and that several companies are still working on devices that will use HBM2E. Either way, widespread use of HBM3 is still a long way off.
