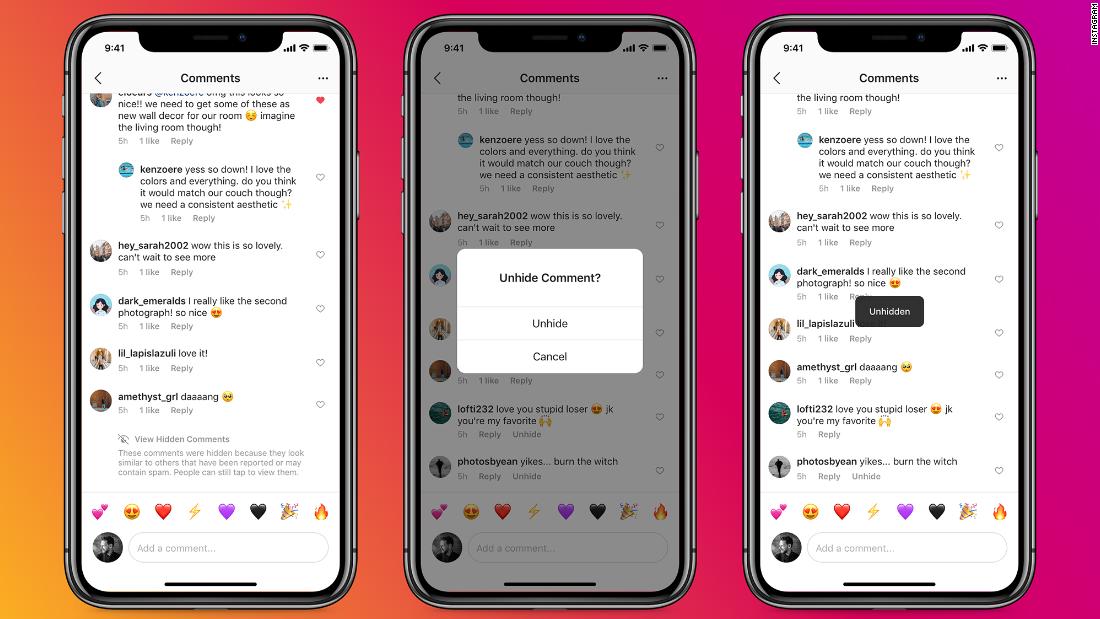

The company said the comments it hides will be similar to those users have reported in the past. Instagram said it uses its existing artificial intelligence systems to identify bullying or harassing language in comments.

Instagram announced on Tuesday that it would test the feature. The day also marked the app's tenth birthday.

Users will still be able to tap “View Hidden Comments” to see those comments.

Instagram said that since it began issuing comment warnings, it has seen a “significant improvement” in whether people edit their comments, although it did not elaborate further.

On Tuesday, Instagram also said it was adding an extra warning for people who repeatedly post comments that could be offensive. The notification will ask them to go back to their comment; otherwise they risk consequences such as having the comment hidden or their account deleted.
