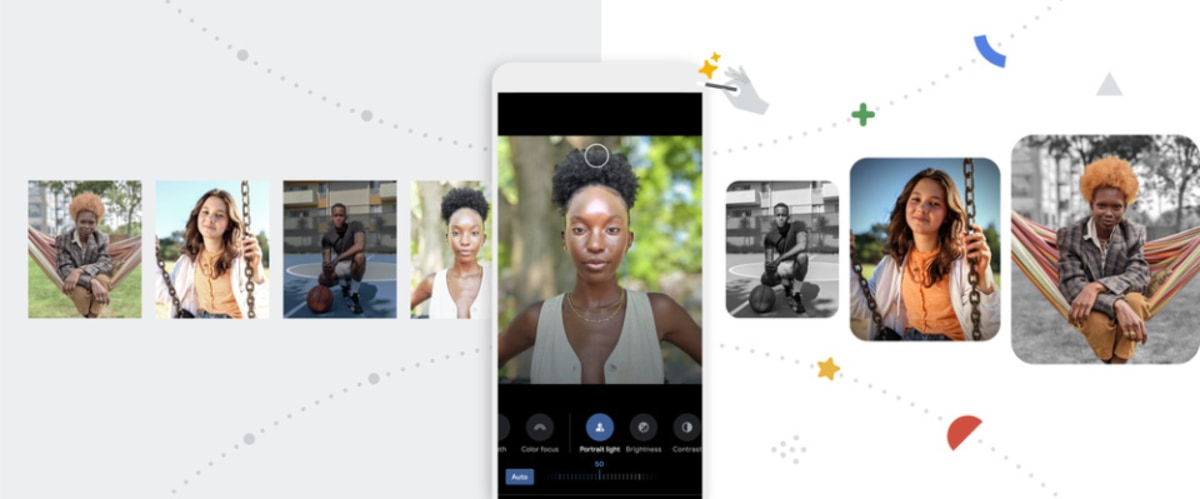

Google’s Portrait Light feature can make some of your mediocre photos look much better by letting you change the direction and intensity of the lighting. The tech giant launched the AI-based lighting feature in September for the Pixel 4a 5G and Pixel 5 before extending it to older Pixel phones. Now, Google has published a post on its AI blog that explains the technology behind Portrait Light, including how it trained its machine learning models.

To train one of those models to add lighting to a photo from a certain direction, Google needed millions of portraits with and without additional lighting from different directions. The company used a spherical lighting rig with 64 cameras and 331 individually programmable LED light sources to capture the photos it needed. It photographed 70 people with different skin tones, face shapes, genders, hairstyles, and even clothing and accessories, illuminating them within the sphere one light at a time. The company also trained a model to determine the best lighting profile for automatic light placement. Google’s post has all the technical details, if you want to know how the feature came about.