
Speaking at the Sight Tech Global conference on Thursday, Apple executives Chris Fleizach and Sarah Herrlinger delved into the company’s efforts to make its products accessible to users with disabilities.

In a virtual interview conducted by TechCrunch’s Matthew Panzarino, Fleizach and Herrlinger detailed the origins of accessibility at Apple, where it stands today, and what users can expect in the future.

Fleizach, who serves as Apple’s Accessibility Engineering Lead for iOS, provided background on how accessibility options landed on the company’s mobile platform. Recalling the original iPhone, which lacked many of the accessibility features users now rely on, he said the Mac VoiceOver team was only granted access to the product after it shipped.

“And we saw the device come out and we started thinking, we can probably make it accessible,” Fleizach said. “We were lucky enough to get in very early. I mean, the project was very secret until it shipped, but very soon, right after it shipped, we were able to get involved and start prototyping.”

The effort to bring VoiceOver to the iPhone, which arrived with iOS 3 in 2009, took about three years.

Fleizach also suggested that the VoiceOver for iPhone project gained traction after a chance encounter with the late Apple co-founder Steve Jobs. By his account, Fleizach was having lunch (presumably on the Apple campus) with a friend who uses VoiceOver for Mac, and Jobs was sitting nearby. Jobs came over to discuss the technology, at which point Fleizach’s friend asked whether it might be available on the iPhone. “Maybe we can do that,” Jobs said, according to Fleizach.

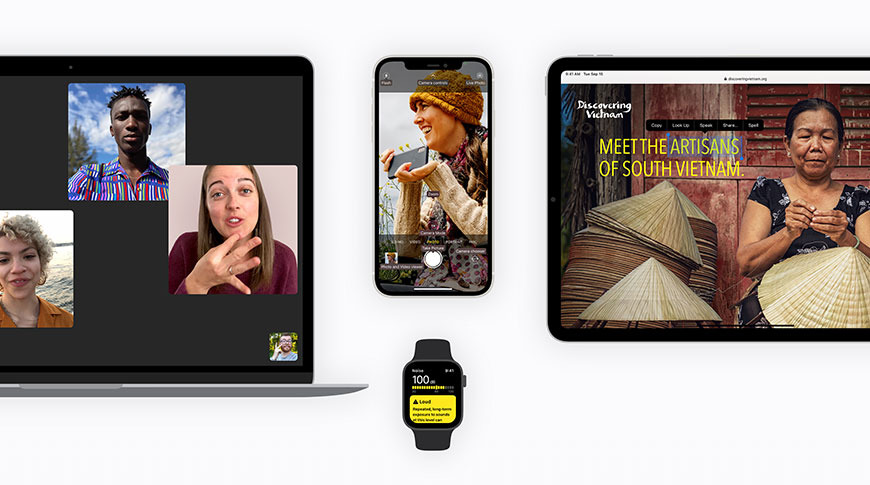

From those humble beginnings, accessibility in iOS has grown into a feature set that includes AssistiveTouch, hearing aid support, audio descriptions, dictation, Sound Recognition, and more. The latest iPhone 12 Pro adds LiDAR to the mix for People Detection.

Herrlinger, Apple’s senior director of global accessibility policy and initiatives, said the accessibility team now joins a wide variety of projects early on.

“In fact, we join these projects very early,” she said. “I think while other teams are thinking about their use cases, maybe for a wider audience, they are talking to us about the kinds of things they are doing so we can start to imagine what we could do with them from an accessibility perspective.”

Recent features such as VoiceOver Recognition and Screen Recognition are built on cutting-edge machine learning work. The latest iOS devices incorporate A-series chips with Apple’s Neural Engine, dedicated hardware designed specifically for ML calculations.

VoiceOver Recognition is one example of this new ML-based functionality. With the tool, iOS can not only describe the content displayed on the screen but also do so with context. For example, instead of saying that a scene includes a “dog, pool, and ball,” VoiceOver Recognition can combine those subjects into a description such as “dog jumping over a pool to find a ball.”

Apple is only scratching the surface with ML. As Panzarino pointed out, a recently posted video shows software that apparently applies VoiceOver Recognition to the iPhone’s camera feed to provide a near-real-time description of the surroundings. The experiment hints at what might be available to iOS users in the coming years.

“We want to keep developing more and more features and make those features work together,” Herrlinger said. “So whatever combination you need to be most effective using your device, that’s our goal.”

The full interview has been uploaded to YouTube.