
With LiDAR now present in the iPad Pro and iPhone 12 Pro, “Apple Glass” could use a combination of radar and LiDAR to detect the user’s surroundings when the light is too low for them to see clearly.

Little by little we’ve been learning what Apple plans for the LiDAR sensor on devices like the iPhone 12 Pro, which uses it to help with autofocus. That focus aid is particularly useful in low-light environments, and Apple plans to exploit the same ability to help users of “Apple Glass.”

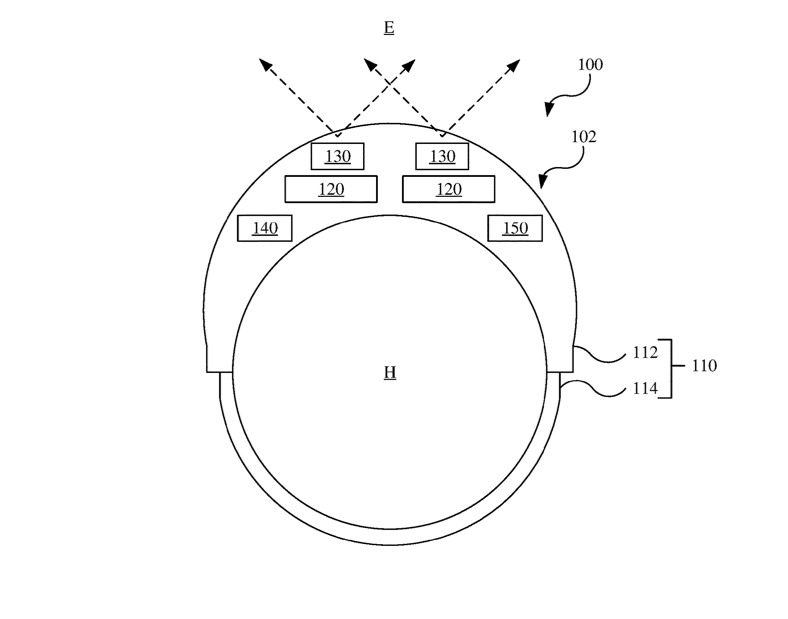

“Head-mounted display with low light operation” is a recently disclosed patent application that describes multiple ways of sensing the environment around the user of a head-mounted display (HMD).

“Human eyes have different sensitivities in different lighting conditions,” Apple begins. It then details several forms of human vision, from photopic to mesopic, in which different types and amounts of light engage different “cone cells of the eye.”

Photopic vision is described as the functioning of the eye under “high levels of ambient light … like daylight.” Apple then cautions that mesopic and other forms of vision perform poorly by comparison.

“Compared to photopic vision, [these] can result in a loss of color vision, changes in sensitivity to different wavelengths of light, reduced acuity and more motion blur,” says Apple. “Therefore, in low light conditions, such as when relying on scotopic vision, a person is less able to see the environment than in good lighting conditions.”

Apple’s proposed solution uses sensors in an HMD such as “Apple Glass” to record the surrounding environment. The results are then presented to the user as unspecified “graphic content.”

Key to recording the environment is the ability to detect the distance to objects, in other words, depth. “The depth sensor detects the environment and, in particular, it detects the depth (for example, the distance) [from the sensor] to objects in the environment,” says Apple.

A head-mounted display could include radar and LiDAR sensors

“The depth sensor generally includes an illuminator and a detector,” Apple continues. “The illuminator emits electromagnetic radiation (for example, infrared light) … into the environment. The detector observes the electromagnetic radiation reflected off objects in the environment.”
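An illuminator paired with a detector is the basic setup for time-of-flight ranging: the sensor times how long emitted light takes to bounce back, and the one-way distance is half the round trip multiplied by the speed of light. The sketch below assumes a single clean pulse measurement and ignores real-world effects such as multipath and noise; `tof_distance_m` is a hypothetical helper for illustration, not anything from Apple’s filing.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Distance to a reflecting object from a measured round-trip time.

    The emitted pulse travels to the object and back, so the one-way
    distance is half of (propagation speed * elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # An infrared pulse that returns after 20 nanoseconds came from about 3 m away.
    print(f"{tof_distance_m(20e-9):.3f} m")
```

The same arithmetic applies to ultrasonic ranging if the speed of sound (roughly 343 m/s in air at 20 °C) is passed in place of the speed of light.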

Apple is careful not to limit the possible detectors or methods that its patent application describes, but does offer “specific examples.” These include structured light, where a known pattern is projected onto the environment and the way that pattern deforms across objects reveals their depth, and time of flight, which measures how long emitted light takes to return.
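Structured light recovers depth by triangulation: a pattern feature projected from one position and viewed by a camera offset from it appears shifted, and nearer surfaces shift it more. For an idealized, rectified projector-camera pair, depth equals focal length times baseline divided by that shift (the disparity). This is a minimal sketch of that textbook relation, not Apple’s method; the function name and the 600-pixel focal length and 5 cm baseline in the example are illustrative assumptions.

```python
def depth_from_disparity_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth for an idealized, rectified projector-camera pair.

    focal_px:     camera focal length expressed in pixels
    baseline_m:   distance between projector and camera, in metres
    disparity_px: how far a pattern feature appears shifted, in pixels
    Depth is inversely proportional to the shift.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical geometry: 600 px focal length, 5 cm baseline.
    for shift in (10.0, 30.0, 60.0):
        depth = depth_from_disparity_m(600.0, 0.05, shift)
        print(f"shift {shift} px -> depth {depth:.2f} m")
```

Doubling the observed shift halves the estimated depth, which is why structured light tends to be most precise at short range.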

“In other examples, the depth sensor can be a radio detection and ranging (RADAR) sensor or a light detection and ranging (LIDAR) sensor,” says Apple. “It should be noted that one or more types of depth sensors can be used, for example incorporating one or more of a structured light sensor, a time-of-flight camera, a RADAR sensor, and/or a LIDAR sensor.”

Apple’s HMD could also use “ultrasonic sound waves,” but regardless of what the headset emits, the patent application is about accurately measuring the environment and conveying that information to the user.

“The [HMD’s] controller determines the graphic content according to the detection of the environment with one or more of the infrared sensor or the depth sensor and the ultrasonic sensor,” says Apple, “and operates the screen to provide the graphic content at the same time as the detection of the environment.”
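Apple doesn’t say how the controller combines its sensors. As a purely hypothetical sketch, assume each sensor reports a distance for a given direction (or nothing, if it got no return), and the controller keeps the nearest valid reading per direction and labels anything within 1.5 m. The `Reading` type, the sensor names, the bearings, and the threshold are all illustrative assumptions, not details from the filing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    """One hypothetical range reading from a single sensor."""
    sensor: str                 # e.g. "infrared", "depth", "ultrasonic"
    bearing_deg: int            # direction relative to where the wearer faces
    distance_m: Optional[float]  # None if the sensor got no return


def fuse_nearest(readings: list[Reading]) -> dict[int, float]:
    """Keep the closest valid distance reported for each bearing."""
    fused: dict[int, float] = {}
    for r in readings:
        if r.distance_m is None:
            continue  # skip sensors that saw nothing in this direction
        current = fused.get(r.bearing_deg)
        if current is None or r.distance_m < current:
            fused[r.bearing_deg] = r.distance_m
    return fused


def graphic_overlay(fused: dict[int, float], warn_within_m: float = 1.5) -> list[str]:
    """Turn fused distances into simple on-screen labels, nearest first."""
    close = sorted((d, b) for b, d in fused.items() if d <= warn_within_m)
    return [f"obstacle {d:.1f} m at {b:+d} deg" for d, b in close]


if __name__ == "__main__":
    frame = [
        Reading("depth", 0, 2.4),
        Reading("ultrasonic", 0, 2.1),
        Reading("infrared", -30, 0.9),
        Reading("depth", -30, None),  # depth sensor lost this return
        Reading("ultrasonic", 45, 1.2),
    ]
    for label in graphic_overlay(fuse_nearest(frame)):
        print(label)
```

Trusting the nearest reading is a deliberately conservative choice for this illustration: for someone walking in the dark, a false alarm costs less than a missed obstacle.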

This patent application is credited to four inventors, each of whom has related previous work. They include Trevor J. Ness, who is credited on AR and VR tools for facial mapping.

The inventors also include Fletcher R. Rothkopf, who has a previous patent on providing AR and VR headsets to “Apple Car” passengers without making them sick.

That’s a problem for all head-mounted displays, and alongside this low-light patent application, Apple now has one about using gaze tracking to reduce potential disorientation and, consequently, motion sickness.

Gaze tracking for mixed reality images

“Gaze Tracking Image Enhancement Devices” is another recently disclosed patent application, this time focusing on presenting AR images when a user may be shaking their head.

“It can be challenging to present mixed reality content to a user,” explains Apple. “The presentation of mixed reality images to a user can, for example, be interrupted by movement of the head and eyes.”

“Users can have different visual abilities,” Apple continues. “If care is not taken, mixed reality images may be presented that cause disorientation or dizziness and that make it difficult for the user to identify items of interest.”

Depending on where the user looks, “Apple Glass” could alter the images to avoid motion sickness.

Part of the proposed solution sounds very similar to the LiDAR assistance in low-light environments. A headset or other device “can also collect information about the real-world image, such as information about the content, movement, and other attributes of the image by analyzing the real-world image.”

That is in addition to what the device knows about “a user’s vision information, such as the user’s visual acuity, contrast sensitivity, field of view, and geometric distortions.” Along with the preferences that the user can set, the mixed reality image can be presented in a way that minimizes disorientation.

“For example, a part of a real-world image can be magnified for a user with low vision only when the user’s point of view is relatively static to avoid inducing dizzy effects,” says Apple.
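The magnification rule Apple quotes amounts to gating a zoom effect on gaze stability. A minimal sketch, assuming gaze samples arrive as timestamped yaw and pitch angles: compute the peak angular speed across a short window of samples and magnify only while it stays under a threshold. The 30 degrees-per-second threshold, the 2x zoom, and the function names are arbitrary placeholders, not values from the patent application.

```python
import math
from dataclasses import dataclass


@dataclass
class GazeSample:
    t_s: float        # timestamp in seconds
    yaw_deg: float    # horizontal gaze angle
    pitch_deg: float  # vertical gaze angle


def peak_gaze_speed_deg_s(samples: list[GazeSample]) -> float:
    """Largest angular speed between consecutive samples (small-angle approximation)."""
    peak = 0.0
    for a, b in zip(samples, samples[1:]):
        dt = b.t_s - a.t_s
        if dt <= 0:
            continue  # ignore duplicate or out-of-order timestamps
        angle = math.hypot(b.yaw_deg - a.yaw_deg, b.pitch_deg - a.pitch_deg)
        peak = max(peak, angle / dt)
    return peak


def magnification(samples: list[GazeSample], low_vision: bool,
                  zoom: float = 2.0, max_speed_deg_s: float = 30.0) -> float:
    """Zoom factor for the viewed region: magnify only while the gaze is steady."""
    if not low_vision or len(samples) < 2:
        return 1.0
    return zoom if peak_gaze_speed_deg_s(samples) <= max_speed_deg_s else 1.0


if __name__ == "__main__":
    steady = [GazeSample(0.0, 0.0, 0.0), GazeSample(0.1, 0.5, 0.0), GazeSample(0.2, 1.0, 0.0)]
    darting = [GazeSample(0.0, 0.0, 0.0), GazeSample(0.1, 10.0, 0.0), GazeSample(0.2, 20.0, 0.0)]
    print("steady gaze zoom:", magnification(steady, low_vision=True))
    print("darting gaze zoom:", magnification(darting, low_vision=True))
```

Using the peak rather than the average speed means a single quick glance is enough to switch magnification off, which errs on the side of avoiding dizziness.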

This patent application is credited to four inventors, including Christina G. Gambacorta, who was previously credited on a related patent about using gaze tracking in “Apple Glass” to balance image quality with battery life by altering the resolution of what the user is looking at.
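Rendering at full resolution only where the user is looking is commonly called foveated rendering. A rough sketch, assuming full resolution within 5 degrees of the gaze point and a linear fall-off to a quarter of full resolution over the next 20 degrees; those numbers and the `resolution_scale` helper are illustrative assumptions, not taken from Apple’s patent.

```python
import math


def resolution_scale(point_deg: tuple[float, float], gaze_deg: tuple[float, float],
                     fovea_deg: float = 5.0, min_scale: float = 0.25,
                     falloff_deg: float = 20.0) -> float:
    """Fraction of full resolution to render at a point, given where the user looks.

    Full resolution within `fovea_deg` of the gaze point, then a linear drop
    to `min_scale` over the next `falloff_deg` degrees, constant beyond that.
    """
    eccentricity = math.hypot(point_deg[0] - gaze_deg[0], point_deg[1] - gaze_deg[1])
    if eccentricity <= fovea_deg:
        return 1.0
    t = min((eccentricity - fovea_deg) / falloff_deg, 1.0)
    return 1.0 - t * (1.0 - min_scale)


if __name__ == "__main__":
    gaze = (0.0, 0.0)
    for x in (3.0, 15.0, 40.0):
        print(f"{x:>4} deg from gaze -> scale {resolution_scale((x, 0.0), gaze):.3f}")
```

Cutting resolution in the periphery reduces the pixels the GPU must shade each frame, which is where the battery savings would come from.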