
The most popular GPU among Steam users today, NVIDIA’s venerable GTX 1060, is capable of 4.4 teraflops; the soon-to-be-usurped 2080 Ti can handle around 13.5, and the upcoming Xbox Series X can handle 12. These numbers are calculated by taking the number of shader cores on a chip, multiplying it by the card’s maximum clock speed, and then multiplying that by the number of operations each core can perform per clock. Unlike many numbers we see in the PC space, it’s a fair and transparent calculation, but that doesn’t make it a good measure of gaming performance.
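The calculation described above fits in a few lines of Python. This is a sketch, not any vendor’s official formula: it counts a fused multiply-add as two floating-point operations per core per clock, and the core counts and boost clocks for the GTX 1060 (1,280 cores, 1,708 MHz), the reference 2080 Ti (1,545 MHz) and the Xbox Series X GPU (3,328 shaders, 1,825 MHz) are assumptions taken from published spec sheets rather than from this article.

```python
def teraflops(shader_cores: int, boost_clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Peak FP32 throughput: cores x clock x floating-point ops per core per clock."""
    # cores * GHz * ops/clock gives GFLOPS; dividing by 1,000 converts to TFLOPS.
    return shader_cores * boost_clock_ghz * flops_per_clock / 1000


# Assumed published specs: (shader cores, boost clock in GHz)
GPUS = {
    "GTX 1060": (1280, 1.708),
    "RTX 2080 Ti": (4352, 1.545),
    "Xbox Series X": (3328, 1.825),
}

for name, (cores, clock) in GPUS.items():
    print(f"{name}: {teraflops(cores, clock):.1f} TFLOPS")
```

Running it prints 4.4, 13.4 and 12.1 TFLOPS, close to the figures quoted above; factory-overclocked cards with higher boost clocks land a little higher.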


AMD’s RX 580, a 6.17-teraflop GPU from 2017, for example, performs similarly to the RX 5500, a budget 5.2-teraflop card the company released last year. This kind of “hidden” upgrade can be attributed to many factors, from architectural changes to new features being adopted by game developers, but almost every GPU family comes with these generational gains. That’s why the Xbox Series X is expected to outperform the Xbox One X by more than the “12 vs. 6 teraflops” figures suggest. (The same goes for the PS5 versus the PS4 Pro.)

The point is that even within a single GPU company, year-to-year changes in how chips and games are designed make it hard to pin down what “a teraflop” actually means for gaming performance. Compare an AMD card with an NVIDIA card from any generation and the figure is even less useful.

All of which brings us to the RTX 3000 series. These cards arrived with some truly impressive specs. The RTX 3070, a $500 card, has 5,888 CUDA cores (NVIDIA’s name for shader cores) and is rated at 20 teraflops. What about the new $1,500 flagship, the RTX 3090? 10,496 cores, for 36 teraflops. For context, the RTX 2080 Ti, currently the best “consumer” graphics card available, has 4,352 CUDA cores. NVIDIA, then, has increased the core count of its flagship by more than 140 percent and its teraflop rating by more than 160 percent.
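The two percentages come straight from the 2080 Ti and 3090 figures quoted above. A quick check, using a small helper of our own naming:

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


# Figures quoted in the article: RTX 2080 Ti vs. RTX 3090
core_gain = percent_increase(4352, 10496)  # CUDA cores
tflops_gain = percent_increase(13.5, 36)   # peak FP32 teraflops

print(f"cores: +{core_gain:.0f}%, teraflops: +{tflops_gain:.0f}%")
```

This prints `cores: +141%, teraflops: +167%`, which is where the “more than 140” and “more than 160” percent figures come from.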

Well, it has and it hasn’t.

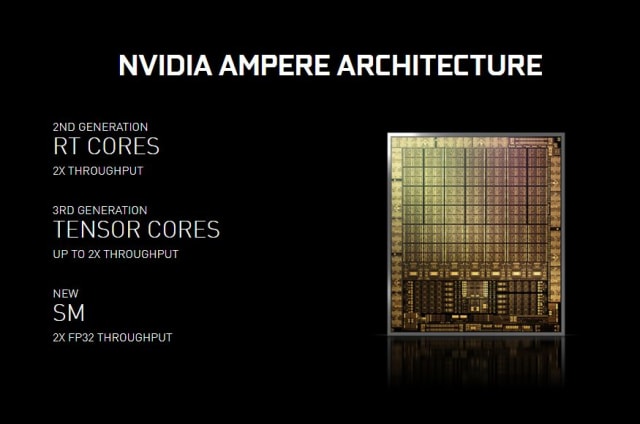

NVIDIA cards are made up of many “streaming multiprocessors,” or SMs. Each of the 2080 Ti’s 68 Turing SMs contains, among many other things, 64 “FP32” CUDA cores dedicated to floating-point math and 64 “INT32” cores dedicated to integer math.

The big innovation in the Turing SM, besides AI and ray-tracing acceleration, was the ability to run integer and floating-point math simultaneously. This was a significant change from the previous generation, Pascal, where the cores would switch between integer and floating-point work on an either-or basis.


The RTX 3000 cards are based on an architecture NVIDIA calls “Ampere,” and its SM, in a way, borrows from both the Pascal and Turing approaches. Ampere keeps the 64 FP32 cores as before, but the other 64 cores are now designated “FP32 or INT32.” So half of Ampere’s cores are dedicated to floating-point math, while the other half can perform either integer or floating-point math, just like in Pascal.

With this change, NVIDIA now counts each SM as containing 128 FP32 cores, rather than Turing’s 64. The 3070’s “5,888 CUDA cores” are better described as “2,944 CUDA cores and 2,944 cores that can act as CUDA cores.”
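The way these SM layouts add up to the advertised core counts can be modeled with a small data class. This is a simplified sketch of our own: the `SM` class and `card_cores` helper are hypothetical, the 68 SMs and 64-core banks come from the text above, and the SM counts for the 3070 (46) and 3090 (82) are assumptions based on NVIDIA’s published specs.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SM:
    """Simplified streaming multiprocessor, described by its per-SM core banks."""
    fp32_only: int      # cores that can only do floating-point math
    int32_only: int     # cores that can only do integer math
    fp32_or_int32: int  # shared cores that can do either, one at a time

    @property
    def advertised_cuda_cores(self) -> int:
        # Every core able to run FP32 counts toward the advertised "CUDA core" total
        return self.fp32_only + self.fp32_or_int32


TURING_SM = SM(fp32_only=64, int32_only=64, fp32_or_int32=0)
AMPERE_SM = SM(fp32_only=64, int32_only=0, fp32_or_int32=64)


def card_cores(sm: SM, sm_count: int) -> Tuple[int, int]:
    """Return (advertised CUDA cores, FP32-only cores) for a whole GPU."""
    return sm.advertised_cuda_cores * sm_count, sm.fp32_only * sm_count


print(card_cores(TURING_SM, 68))  # RTX 2080 Ti
print(card_cores(AMPERE_SM, 46))  # RTX 3070 (assumed 46 SMs)
print(card_cores(AMPERE_SM, 82))  # RTX 3090 (assumed 82 SMs)
```

The 2080 Ti comes out at 4,352 cores, all of them FP32-only, while the 3070 reaches 5,888 advertised cores of which only 2,944 are FP32-only, matching the description above.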

As games have become more complex, developers have come to rely more on integer math. An NVIDIA slide from the original RTX launch in 2018 suggested that integer math, on average, made up about a quarter of the GPU operations in games.

The downside to the Turing SM is the potential for underutilization. If, for example, a workload is 25 percent integer math, the integer cores have only a third as much work as the floating-point cores, leaving roughly a third of the GPU’s cores sitting around with nothing to do. That’s the thinking behind this new semi-unified core structure, and on paper it makes perfect sense: you can still run integer and floating-point operations simultaneously, but when the integer-capable cores would otherwise be idle, they can run floating-point operations instead.
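The idle-core argument can be checked with a simple throughput model. The model is our own idealization, not NVIDIA’s: each SM is treated as 128 cores that each retire one operation per clock, scheduling is perfect, and integer work may only run on cores that support INT32. The function names are hypothetical.

```python
def turing_utilization(int_fraction: float) -> float:
    """Share of a Turing SM's 128 cores kept busy (64 FP32-only + 64 INT32-only)."""
    fp, integer = 1.0 - int_fraction, int_fraction
    # Each bank works through its own queue; the slower bank sets the pace.
    cycles = max(fp / 64, integer / 64)
    return 1.0 / (128 * cycles)


def ampere_utilization(int_fraction: float) -> float:
    """Share of an Ampere SM's 128 cores kept busy (64 FP32-only + 64 FP32/INT32)."""
    # Integer work can only use the 64 shared cores; floating-point work can use all 128.
    cycles = max(1.0 / 128, int_fraction / 64)
    return 1.0 / (128 * cycles)


for share in (0.0, 0.25, 0.5):
    print(f"{share:.0%} integer: Turing {turing_utilization(share):.2f}, "
          f"Ampere {ampere_utilization(share):.2f}")
```

Under these assumptions, a 25 percent integer workload keeps only about two thirds of a Turing SM busy, while the Ampere SM stays fully occupied. At an even 50/50 split the two layouts tie, which is why the gain depends so heavily on the mix of work in a given game.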

[This episode of Upscaled was produced before NVIDIA explained the SM changes.]

At NVIDIA’s RTX 3000 launch, CEO Jensen Huang said the RTX 3070 was “more powerful than the RTX 2080 Ti.” Using what we now know about Ampere’s design (its integer and floating-point cores, clock speeds and teraflops), we can estimate how things might play out. At that “25 percent integer” workload, 4,416 of the 3070’s cores could be running FP32 math, with 1,472 handling the necessary INT32 work.
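The 4,416/1,472 split is simply the 25 percent integer share applied to the 3070’s 5,888 CUDA cores. The sketch below generalizes it; the function name and the 0 to 50 percent limit are our own simplification, reflecting that only the shared half of each Ampere SM can run integer math.

```python
from typing import Tuple


def ampere_core_split(cuda_cores: int, int_fraction: float) -> Tuple[int, int]:
    """Split an Ampere card's advertised CUDA cores into (FP32, INT32) work.

    Simplified model: only the shared half of the cores can run INT32,
    so integer work can claim at most half of the advertised total.
    """
    if not 0.0 <= int_fraction <= 0.5:
        raise ValueError("model only covers integer shares from 0 to 50 percent")
    int_cores = round(cuda_cores * int_fraction)
    return cuda_cores - int_cores, int_cores


print(ampere_core_split(5888, 0.25))  # the article's 25 percent case
print(ampere_core_split(5888, 0.50))  # integer-heavy worst case for this model
```

At the 50 percent limit the 3070 has 2,944 cores left for FP32 work, the same CUDA core count NVIDIA lists for the RTX 2080.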

Combined with all the other changes Ampere brings, the 3070 could outperform the 2080 Ti by perhaps 10 percent, assuming a game doesn’t mind having 8GB of memory to work with instead of 11GB. In the absolute worst (and highly unlikely) case, where a workload is extremely integer-heavy, it might behave more like a 2080. On the other hand, if a game requires very little integer math, the boost over the 2080 Ti could be huge.
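A back-of-the-envelope version of that estimate compares the FP32 teraflops each card has left for floating-point work. This is a paper-throughput sketch only, under our own assumption that integer work occupies a matching share of the 3070’s cores while the 2080 Ti handles it on separate INT32 cores; it ignores memory, clock behavior and every other architectural change. The constant and function names are hypothetical.

```python
RTX_3070_TFLOPS = 20.0     # peak FP32 figure quoted in the article
RTX_2080_TI_TFLOPS = 13.5  # peak FP32 figure quoted in the article


def effective_fp32_tflops_3070(int_fraction: float) -> float:
    """FP32 teraflops left for floating-point work once integer work is scheduled."""
    if not 0.0 <= int_fraction <= 0.5:
        raise ValueError("model only covers integer shares from 0 to 50 percent")
    # Integer work takes the same share of the 3070's cores (up to the shared half)
    return RTX_3070_TFLOPS * (1.0 - int_fraction)


# The 2080 Ti runs integer math on separate cores, so its FP32 figure stays at 13.5
for share in (0.0, 0.25, 0.5):
    ratio = effective_fp32_tflops_3070(share) / RTX_2080_TI_TFLOPS
    print(f"{share:.0%} integer: 3070 at {ratio:.2f}x a 2080 Ti")
```

The output (1.48x, 1.11x and 0.74x) lines up with the three cases above: a large boost for FP-heavy games, roughly 10 percent at the typical 25 percent integer mix, and 2080-like behavior when integer work dominates.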

Conjecture aside, we do have one point of comparison so far: a Digital Foundry video comparing the RTX 3080 to the RTX 2080. DF saw a 70 to 90 percent generational improvement across a range of games NVIDIA approved for testing, with a larger gap in titles that use RTX features like ray tracing. That range hints at the kind of variable performance gain we would expect from the new shared cores. It will be interesting to see how a wider set of games performs, since NVIDIA likely picked titles that show Ampere at its best. What you won’t see is the nearly 3x improvement that the jump from the 2080’s teraflop figure to the 3080’s would suggest.
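To put the “nearly 3x” in numbers, the sketch below compares the paper teraflop ratio with Digital Foundry’s measured range. The core counts (2,944 and 8,704) and the shared 1,710 MHz reference boost clock are assumptions taken from NVIDIA’s published specs, not from this article; because the clocks match, the ratio reduces to the core-count ratio.

```python
# Assumed published specs, not from the article: (CUDA cores, reference boost clock in GHz)
RTX_2080 = (2944, 1.71)
RTX_3080 = (8704, 1.71)


def peak_tflops(cores: int, boost_ghz: float) -> float:
    """Peak FP32 TFLOPS, counting a fused multiply-add as two operations."""
    return cores * boost_ghz * 2 / 1000


paper_gain = peak_tflops(*RTX_3080) / peak_tflops(*RTX_2080)
# Digital Foundry's reported range, as multipliers (70 to 90 percent faster)
measured_low, measured_high = 1.7, 1.9

print(f"on paper: {paper_gain:.2f}x; measured: {measured_low}x to {measured_high}x")
```

On paper the 3080 is about 2.96x a 2080, well above the 1.7x to 1.9x seen in DF’s testing.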

With the first RTX 3000 cards arriving within weeks, reviews should give you a firm idea of Ampere’s performance soon. Even now, though, it feels safe to say that Ampere represents a monumental leap for PC gaming. The $499 3070 is likely to trade blows with the current flagship, and the $699 3080 should offer more than enough performance for those who might previously have opted for a “Ti.” However these cards end up stacking up, it’s clear their value can no longer be captured by a single figure such as teraflops.