[ad_1]

-

It turns out that Huang was cooking eight A100 GPUs, two 64-core Epyc 7742 CPUs, nine Mellanox interconnects, and various assortments like RAM and SSD. Mmmm, like grandma used to do.

-

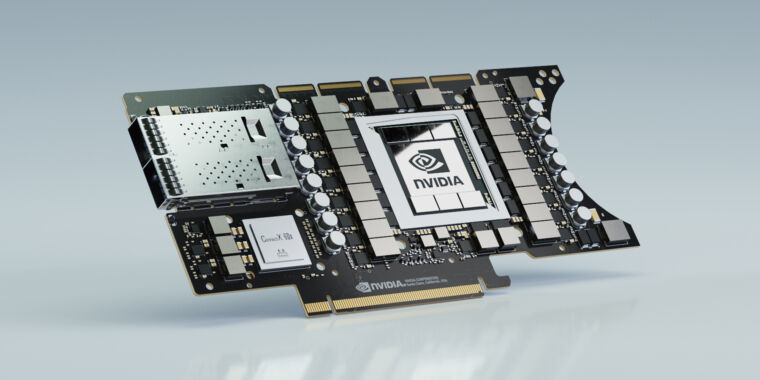

Looking at an A100 GPU without its huge heatsink installed makes us feel a bit uncomfortable.

-

This fully assembled DGX A100 node looks oddly alone, on its own with no racks, cables, and flashing lights.

This morning everyone found out what CEO Jensen Huang was kitchen: an Ampere successor to Volta’s DGX-2 deep learning system.

Yesterday, we described the mysterious hardware in Huang’s kitchen as “packing some Xeon CPUs” in addition to the new Tesla v100 GPU successor. The egg is in our face for that: The new system includes a pair of 64-core, 128-thread AMD Epyc 7742 CPUs, along with 1TiB of RAM, a pair of NVMe 1.9TiB SSDs in RAID1 for a boot drive, and up to four NVMe PCIe4.0 3.8TiB drives in RAID 0 as secondary storage.

Goodbye Intel, hello AMD

-

It seems highly likely that Nvidia did not want to financially support Intel’s plans to develop its own profitable deep learning territory.

-

The Intel DG1 at least exists, but its leaked benchmarks don’t look impressive, hinting at performance similar to that of years old Nvidia GPUs.

-

We’ve heard rumors of a Xe DG2, but all we’ve seen so far are conceptual representations, not actual hardware.

Technically, it shouldn’t come as too much of a surprise that Nvidia used AMD for CPUs on its iconic machine learning nodes: Epyc Rome has been kicking the line of Intel’s Xeon server CPU for quite some time. Staying technical, Epyc 7742 support for PCIe 4.0 may have been even more important than its high CPU speed and large number of cores / threads.

GPU-based machine learning frequently blocks storage, not CPU. The M.2 and U.2 interfaces used by the DGX A100 use 4 PCIe lanes, which means that changing from PCI Express 3.0 to PCI Express 4.0 means doubling the available storage bandwidth from 128GiB / sec to 256GiB / sec by individual SSD.

There may also have been a bit of politics lurking behind the decision to switch CPU providers. AMD may be Nvidia’s biggest competitor in the relatively low-margin consumer graphics market, but Intel is concentrating on the market side of the data center. For now, Intel’s discrete GPU offerings are mostly steam, but we know Chipzilla has much bigger and grander plans as it shifts its focus from the dying consumer CPU market to everything related to the data center.

Intel DG1 itself, which is the only real hardware we’ve seen so far, has leaked benchmarks that make it compete with the integrated Vega GPU of a Ryzen 7 4800U. But Nvidia might be more concerned with the HP 4-tile Xe GPU, whose 2048 UEs (execution units) could offer up to 36TFLOPS, which would at least be at the same stage as the Nvidia A100 GPU that powers the DGX unveiled today.

DGX, HGX, SuperPOD and Jetson

The DGX A100 was the star of today’s announcements: It’s a standalone system with eight A100 GPUs, with a GPU memory of 40TiB each. The Argonne National Laboratory of the US Department of Energy. USA You are already using a DGX A100 for COVID-19 research. The system’s nine 200 Gbps Mellanox interconnects allow grouping multiple DGX A100s, but those whose budget will not be compatible a lot of the $ 200,000 GPU nodes can be achieved by dividing A100 GPUs into up to 56 instances each.

For those who do They have the budget to buy and bundle lots of DGX A100 nodes, they are also available in HGX (Hyperscale Data Center Accelerator) format. Nvidia says that a “typical cloud cluster” made up of its previous DGX-1 nodes along with 600 separate CPUs for inference training could be replaced by five DGX A100 units, capable of handling both workloads. This would condense the hardware from 25 racks to one, the power budget from 630kW to 28kW, and the cost of $ 11 million to $ 1 million.

If the HGX still doesn’t sound big enough, Nvidia has also released a reference architecture for its SuperPOD, unrelated to Plume. Nvidia’s SuperPOD A100 connects 140 DGX A100 and 4PiB nodes of flash storage over 170 Infiniband switches, and offers 700 petaflops of artificial intelligence performance. Nvidia has added four of the SuperPODs to its own SaturnV supercomputer, which, at least according to Nvidia, makes SaturnV the world’s fastest artificial intelligence supercomputer.

Finally, if the data center is not your thing, you can have an A100 in your perimeter computing, with Jetson EGX A100. For those unfamiliar, Nvidia’s Jetson single-board platform can be thought of as a Raspberry Pi on steroids – they are deployable in IoT scenarios but bring significant processing power to a small form factor that can be rugged and integrated into Edge devices like robotics, healthcare and drones.

Nvidia listing image