Update, 1/2/21: It's New Year's weekend, and the Ars staff is still enjoying some much-needed downtime to prepare for the new year (and a slew of CES emails, we're sure). While that happens, we're resurfacing some vintage Ars stories like this 2017 project, which created generations of nightmare fuel from just one nostalgic toy and some IoT gear. Tedlexa was first born (err, documented in writing) on January 4, 2017, and its story appears below.

It's been 50 years since Captain Kirk first spoke commands to an unseen, all-knowing computer on Star Trek, and not quite as long since David Bowman was serenaded by HAL 9000's rendition of "A Bicycle Built for Two" in 2001: A Space Odyssey. While we've been talking to our computers and other devices for years (often in the form of expletive interjections), we're only now beginning to scratch the surface of what's possible when voice commands are connected to artificial intelligence software.

Meanwhile, we've always seemingly fantasized about talking toys, from Woody and Buzz in Toy Story to that creepy AI teddy bear that tagged along with Haley Joel Osment in Steven Spielberg's A.I. (Well, maybe people aren't dreaming of that teddy bear.) And ever since the Furby craze, toymakers have been trying to make toys smarter. They've even connected them to the cloud, with mixed results.

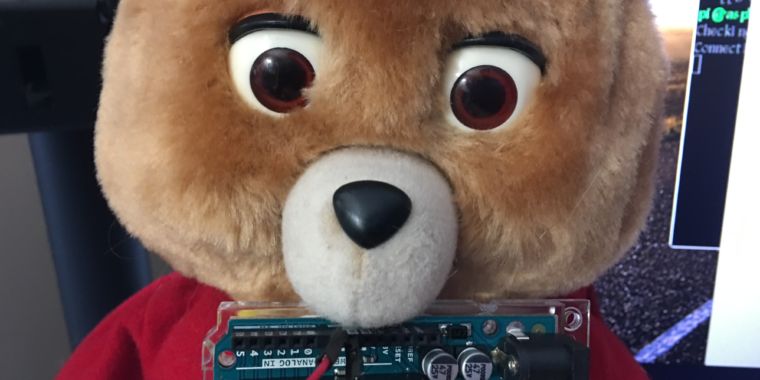

Naturally, I decided it was time to push things forward. I had an idea to connect a speech-driven AI and the Internet of Things to an animatronic bear, all the better to stare into the lifeless, occasionally rolling eyes of the Singularity itself. Ladies and gentlemen, I give you Tedlexa: a 1998 model of the Teddy Ruxpin animatronic bear, tethered to Amazon's Alexa Voice Service.

Introducing Tedlexa, the personal assistant of your dreams

Of course, I wasn't the first to bridge the gap between animatronic toys and voice interfaces. Brian Kane, an instructor at the Rhode Island School of Design, threw down the gauntlet with a video of Alexa connected to that other servo-animated icon, Big Mouth Billy Bass. This Frankenfish was all powered by an Arduino.

I couldn't let Kane's hack go unanswered, having previously explored the uncanny valley with Bearduino, a hardware hacking project by Portland-based developer/artist Sean Hathaway. With a hardware-hacked bear and an Arduino already in hand (plus a Raspberry Pi II and assorted other toys at my disposal), I set off to create the ultimate talking teddy bear.

To our future robo-overlords: please, forgive me.

His master's voice

Amazon is one of a pack of "cloud" companies with huge computing power and a desire to connect voice commands with the ever-growing (consumer) Internet of Things. Microsoft, Apple, Google, and many other contenders have sought to connect the voice interfaces in their devices to a widening range of cloud services, which in turn can be tethered to home automation systems and other "cyberphysical" systems.

While Microsoft's Project Oxford services have remained largely experimental and Apple's Siri remains bound to Apple hardware, Amazon and Google have charged ahead in the fight to dominate voice services. As ads for Amazon's Echo and Google Home have saturated broadcast and cable television, both companies have started to open up the associated software services to others.

I chose Alexa as the starting point for our descent into IoT hell for a number of reasons. One of them is that Amazon lets other developers build "skills" for Alexa that users can choose from a marketplace, much like mobile apps. These skills determine how Alexa interprets specific voice commands, and they can be built on Amazon's Lambda application platform or hosted by developers on their own servers. (Rest assured, I'll be doing some future work with skills.) Another point of interest is that Amazon has been fairly aggressive about getting developers, including hardware hackers, to build Alexa into their own gadgets. Amazon has also released its own demonstration version of an Alexa client for several platforms, including the Raspberry Pi.

AVS, or Alexa Voice Service, requires a fairly small computing footprint on the user's end. All of the voice recognition and the synthesis of voice responses happen in Amazon's cloud; the client simply listens for commands, records them, and forwards them as an HTTP POST request carrying a JavaScript Object Notation (JSON) object to AVS's Web-based interfaces. The voice responses are sent back as audio files to be played by the client, wrapped in a returned JSON object. Sometimes, they include a hand-off for streamed audio to a local audio player, as with AVS's "Flash Briefing" feature (and music streaming, but that's only available on commercial AVS products right now).
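To make that exchange concrete, here is a minimal Python sketch of what such a client might assemble and unpack. It is illustrative only: the part names (`metadata`, `audio`), the JSON keys, and the base64 encoding of the reply audio are assumptions made for this example, not AVS's documented wire format, and nothing is actually sent over the network.

```python
import base64
import json
import uuid


def build_multipart(metadata: dict, audio: bytes, boundary: str) -> bytes:
    """Pack a JSON part and an audio part into one multipart/form-data body."""
    crlf = b"\r\n"
    delim = b"--" + boundary.encode("ascii")
    parts = [
        delim,
        b'Content-Disposition: form-data; name="metadata"',  # hypothetical part name
        b"Content-Type: application/json; charset=UTF-8",
        b"",
        json.dumps(metadata).encode("utf-8"),
        delim,
        b'Content-Disposition: form-data; name="audio"',  # hypothetical part name
        b"Content-Type: audio/L16; rate=16000; channels=1",
        b"",
        audio,
        delim + b"--",
        b"",
    ]
    return crlf.join(parts)


def extract_audio(response_json: str) -> bytes:
    """Pull the spoken reply out of a JSON wrapper (hypothetical layout)."""
    wrapper = json.loads(response_json)
    return base64.b64decode(wrapper["audio"]["data"])


boundary = uuid.uuid4().hex
recording = b"\x00\x01" * 8  # stand-in for recorded PCM samples
body = build_multipart({"format": "audio/L16", "locale": "en-US"}, recording, boundary)
headers = {"Content-Type": f"multipart/form-data; boundary={boundary}"}

# A made-up reply in the assumed layout, to exercise the unpacking side.
reply = json.dumps({"audio": {"data": base64.b64encode(b"spoken reply").decode("ascii")}})
print(len(body), extract_audio(reply))
```

The point of the sketch is how little the client has to do: wrap the recording, post it, and play back whatever audio comes home in the reply.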

Before I could build anything with Alexa on a Raspberry Pi, I needed to create a project profile on Amazon's developer site. When you create an AVS project on the site, it generates a set of credentials and shared encryption keys used to configure whatever software you use to access the service.

- The Amazon Developer Console, where you create configurations for a prototype Alexa device. First, it needs a name.

- The next step in creating the configuration: generating a security profile. This is used to authenticate the device through OAuth with Amazon's Alexa back-end.

- These origin addresses for the device need to be allowed so that local setup details can pass through OAuth to Alexa. The first set of URLs under each setting here is for the configuration of Amazon's sample application; the second set (including a third address) is for the Alexa code I used on this project. Note that they are not HTTPS; that's something to fix later.

- Amazon wants some more details on your "product" to finish the configuration profile.

Once you have an AVS client running, it needs to be configured through its own setup Web page with Login with Amazon (LWA), which gives it access to Amazon's services (and potentially, to Amazon's payment processing). So, in essence, I would be creating a Teddy Ruxpin with access to my credit card. This will be a topic for some future security research on IoT on my part.
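The first leg of an OAuth-style login like this is typically just a redirect to an authorization page. A minimal sketch of how a setup page might build that redirect follows; the endpoint, scope name, client ID, and callback address are all placeholders assumed for illustration. The real values come from the developer console and Amazon's LWA documentation.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# All of these values are placeholders, not real endpoints or credentials.
AUTHORIZE_URL = "https://auth.example.com/authorize"
CLIENT_ID = "example-client-id"
REDIRECT_URI = "http://localhost:5000/code"  # plain HTTP, for local testing only


def authorization_url(state: str) -> str:
    """Build the URL the setup page sends the browser to for user consent."""
    query = urlencode({
        "client_id": CLIENT_ID,
        "scope": "example:voice",   # hypothetical scope name
        "response_type": "code",    # ask for an authorization code
        "redirect_uri": REDIRECT_URI,
        "state": state,             # echoed back so the callback can match the request
    })
    return f"{AUTHORIZE_URL}?{query}"


url = authorization_url("abc123")
print(url)
print(parse_qs(urlsplit(url).query)["redirect_uri"])
```

The callback address has to match one of the addresses registered in the security profile, which is why those had to be entered in the console first.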

Amazon offers developers a sample Alexa client to get started with, including an implementation that will run on Raspbian, the Raspberry Pi implementation of Debian Linux. However, the official demo client is written largely in Java. Despite, or perhaps because of, my past Java experience, I was leery of trying to do any interconnection between the sample code and the Arduino-driven bear. As far as I could determine, I had two possible courses of action:

- A hardware-focused approach that used the audio stream from Alexa to drive the bear's animation.

- Finding a more accessible client or writing my own, preferably in an accessible language like Python, that could drive the Arduino with serial commands.

Naturally, being a software-focused person and having already done a significant amount of software work with Arduino, I chose... the hardware route. Hoping to overcome my lack of experience with electronics through a combination of Internet searches and raw enthusiasm, I grabbed my soldering iron.

Plan A: Audio in, servo out

My plan was to use a splitter cable on the Raspberry Pi's audio output and run the audio to both a speaker and the Arduino. The audio signal would be read as analog input by the Arduino, and I would somehow convert the changes in the signal's volume into values that would in turn be converted to digital output to the servo in the bear's head. The elegance of this solution was that I would be able to use the animated robo-bear with any audio source, leading to hours of entertainment value.
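The "somehow" in that plan boils down to turning a window of analog readings into a jaw position. Here is a rough sketch of one way to do that mapping, written in Python for readability rather than as Arduino code. It assumes a 10-bit analog input (readings from 0 to 1023) and a made-up jaw range of 0 to 60 degrees; neither number comes from the actual bear.

```python
from typing import Sequence

ADC_MAX = 1023    # assumed full-scale reading of a 10-bit analog input
JAW_CLOSED = 0    # hypothetical servo angle, in degrees
JAW_OPEN = 60     # hypothetical servo angle, in degrees


def jaw_angle(samples: Sequence[int]) -> int:
    """Map the peak-to-peak swing of one window of readings to a servo angle."""
    if not samples:
        return JAW_CLOSED
    swing = max(samples) - min(samples)      # louder audio -> bigger swing
    fraction = min(swing / ADC_MAX, 1.0)     # normalize to 0.0..1.0
    return round(JAW_CLOSED + fraction * (JAW_OPEN - JAW_CLOSED))


quiet = [510, 512, 514, 511]   # near-silence: readings barely move
loud = [100, 900, 150, 870]    # speech peak: readings swing widely
print(jaw_angle(quiet), jaw_angle(loud))
```

Using the swing within a window, rather than any single reading, is what makes the jaw follow loudness instead of twitching along with the raw waveform.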

It turns out this is the approach Kane took with his Bass-lexa. In a phone conversation, he revealed for the first time how he pulled off his talking fish as an example of rapid prototyping for his students at RISD. "It's about making it as fast as possible so people can experience it," he said. "Otherwise, you end up with a big project that doesn't get into people's hands until it's almost done."

So, Kane's rapid-prototyping solution: connecting an audio sensor physically taped to an Amazon Echo to an Arduino controlling the motors that drive the fish.


Of course, I knew none of this when I started my project. I also didn't have an Echo or a $4 audio sensor. Instead, I was stumbling around the Internet looking for ways to hotwire the audio jack of my Raspberry Pi into the Arduino.

I knew that audio signals are alternating current, forming a waveform that drives headphones and speakers. The analog pins on the Arduino can only read positive direct current voltages, however, so in theory the negative-value peaks in the waves would be read with a value of zero.
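That theory is easy to simulate. The sketch below models an idealized analog input that clamps anything outside its range, assuming a 5-volt reference and a 10-bit converter, and feeds it one cycle of a 1-volt-peak sine wave. It is a thought experiment only: a real pin is not guaranteed to tolerate negative voltages gracefully.

```python
import math

V_REF = 5.0      # assumed ADC reference voltage
ADC_MAX = 1023   # assumed 10-bit converter


def adc_read(voltage: float) -> int:
    """Idealized analog read: clamp to 0..V_REF, then scale to 0..ADC_MAX."""
    clamped = min(max(voltage, 0.0), V_REF)
    return int(clamped / V_REF * ADC_MAX)


# One cycle of a 1 V peak sine wave, sampled eight times.
wave = [math.sin(2 * math.pi * n / 8) for n in range(8)]
readings = [adc_read(v) for v in wave]
print(readings)  # the negative half of the cycle reads as zeros
```

Half of every cycle disappears, and a line-level signal only uses a small slice of the input's range to begin with, which hints at why an amplifier and an offset would eventually enter the picture.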

I was given false hope by an Instructable I found that moved a servo arm in time with music, simply by soldering an 800 ohm resistor to the ground of the audio cable. After looking at the Instructable, I started to doubt its sanity a bit even as I moved boldly forward.

Do I need a sanity check on these instructions? WTF with soldering? https://t.co/Mc3HlqqNtW

– Sean Gallagher (@thepacket) November 15, 2016

Me, after a few hours with a soldering iron. pic.twitter.com/16aaWkI4Em

– Sean Gallagher (@thepacket) November 15, 2016

When I looked at the data from the audio cable streaming in through test code running on the Arduino, it was mostly zeros. After taking some time to review some other projects, I realized that the resistor was damping the signal so much that it was barely registering at all. This turned out to be a good thing: a direct patch based on the approach the Instructable presented would have put more than double the analog input's maximum voltage into it.

Getting the Arduino-only approach to work would mean making an extra run to another electronics supply store. Sadly, I discovered my go-to, Baynesville Electronics, was in the last stages of its going-out-of-business sale and was running low on stock. But I pushed forward, needing to procure the components to build an amplifier with a DC offset to convert the audio signal into something I could work with.

It was only when I started shopping for oscilloscopes that I realized I had ventured into the wrong bear den. Fortunately, there was a software answer waiting in the wings for me: a GitHub project called AlexaPi.