Mitev et al.

As the popularity of Amazon Alexa and other voice assistants grows, so does the number of ways those assistants can and do intrude on users’ privacy. Examples include hacks that use lasers to surreptitiously unlock connected doors and start cars, malicious assistant apps that eavesdrop and steal passwords, and conversations that are surreptitiously and routinely monitored by vendor employees or subpoenaed for use in criminal trials. Now, researchers have developed a device that may one day allow users to regain their privacy by warning when these devices are mistakenly or intentionally snooping on people nearby.

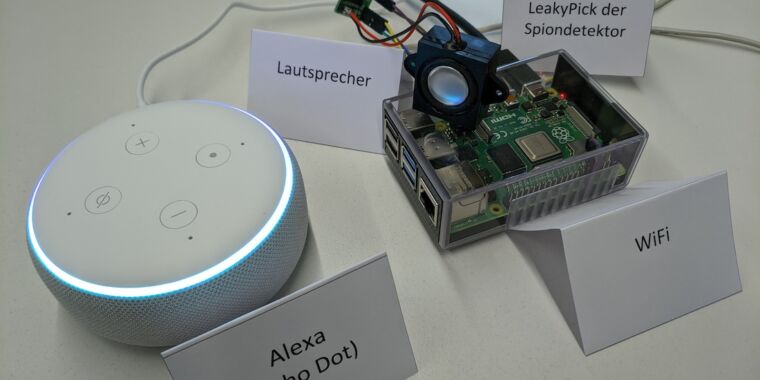

LeakyPick is placed in various rooms of a home or office to detect the presence of devices that stream nearby audio to the Internet. By periodically emitting sounds and monitoring the subsequent network traffic (it can be configured to emit the sounds when users are away), the roughly $40 prototype detects audio transmission with 94 percent accuracy. The device monitors network traffic and provides an alert whenever the identified devices are streaming ambient sounds.

LeakyPick also tests devices for trigger-word false positives, that is, words that incorrectly activate the assistants. So far, the researchers’ device has found 89 words that unexpectedly caused Alexa to stream audio to Amazon. Two weeks ago, a different team of researchers published more than 1,000 words or phrases that produce false triggers and make devices send audio to the cloud.

“For many privacy-conscious consumers, having voice assistants connected to the Internet [with] microphones scattered around their homes is a troubling prospect, despite the fact that smart devices are a promising technology for improving home automation and physical security,” Ahmad-Reza Sadeghi, one of the researchers who designed the device, said in an email. “The device identifies smart home devices that record and send audio to the Internet unexpectedly and warns the user about it.”

Recovering the user’s privacy

Voice-controlled devices typically use local speech recognition to detect trigger words, and for usability, the devices are often programmed to accept similar-sounding words. When a nearby utterance resembles a trigger word, the assistants send audio to a server that has more comprehensive speech recognition. Besides being prone to these inadvertent transmissions, assistants are also vulnerable to hacks that deliberately invoke trigger words to send audio to attackers or carry out other tasks that compromise security.

In a paper published earlier this month, Sadeghi and other researchers from Darmstadt University, Paris Saclay University, and North Carolina State University wrote:

The goal of this paper is to devise a method for regular users to reliably identify IoT devices that 1) are equipped with a microphone and 2) send recorded audio from the user’s home to external services without the user’s knowledge. If LeakyPick can identify which network packets contain audio recordings, it can inform the user which devices are sending audio to the cloud, since the source of the network packets can be identified by hardware network addresses. This provides a way to identify both unintentional transmissions of audio to the cloud and the attacks mentioned above, where adversaries seek to invoke specific actions by injecting audio into the device’s environment.

Achieving all of that required the researchers to overcome two challenges. The first is that most assistant traffic is encrypted. This prevents LeakyPick from inspecting packet payloads for audio codecs or other signs of audio data. Second, with new and previously unseen voice assistants coming out all the time, LeakyPick also has to detect audio streams from devices without prior training for each device. Previous approaches, including one called HomeSnitch, required advance training for each device model.

To clear these hurdles, LeakyPick periodically emits audio into a room and monitors the resulting network traffic from connected devices. By temporally correlating the audio probes with the observed characteristics of the network traffic that follows, LeakyPick enumerates the connected devices that are likely to transmit audio. One way the device identifies probable audio streams is by looking for sudden bursts of outgoing traffic. Voice-activated devices generally send limited amounts of data when they are idle. A sudden increase generally indicates that a device has been activated and is sending audio over the Internet.
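The burst heuristic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the researchers' code: the function names, the one-second window, and the "mean plus three standard deviations" threshold are all assumptions made for the example.

```python
from statistics import mean, pstdev

def bytes_per_window(packets, window_s=1.0):
    """Sum outgoing bytes per fixed-length time window.

    packets: iterable of (timestamp_seconds, size_bytes) for ONE device.
    The window length is an assumption chosen for illustration.
    """
    packets = sorted(packets)
    if not packets:
        return []
    start = packets[0][0]
    n_windows = int((packets[-1][0] - start) // window_s) + 1
    totals = [0] * n_windows
    for ts, size in packets:
        totals[int((ts - start) // window_s)] += size
    return totals

def find_bursts(totals, factor=3.0):
    """Return indices of windows whose volume exceeds mean + factor * std.

    The threshold rule is a hypothetical stand-in for whatever burst
    criterion a real tool would use.
    """
    if len(totals) < 2:
        return []
    threshold = mean(totals) + factor * pstdev(totals)
    return [i for i, total in enumerate(totals) if total > threshold]
```

Feeding the function a trace of small periodic packets plus one large upload right after a probe should flag only the window that contains the upload.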

Relying on bursts alone is prone to false positives. To eliminate them, LeakyPick uses a statistical approach based on an independent two-sample t-test to compare the characteristics of a device’s network traffic when it is idle and when it responds to audio probes. This method has the added benefit of working on devices that the researchers have never analyzed. The method also allows LeakyPick to work not only for voice assistants that use trigger words, but also for security cameras and other Internet of Things devices that transmit audio without trigger words.
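To make the statistical comparison concrete, here is a minimal sketch of a two-sample t-test over per-window traffic volumes. It uses Welch's unequal-variance variant and a fixed critical value purely for illustration; the article does not say which variant, features, or significance level the researchers use, so `welch_t`, `looks_like_audio`, and `t_critical` are assumptions.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(idle, probed):
    """Return (t statistic, degrees of freedom) for two independent samples.

    idle / probed: per-window traffic measurements (e.g. bytes per second)
    taken while the device is idle and right after an audio probe.
    """
    n1, n2 = len(idle), len(probed)
    v1, v2 = variance(idle) / n1, variance(probed) / n2
    se2 = v1 + v2
    if se2 == 0:
        raise ValueError("both samples have zero variance")
    t = (mean(probed) - mean(idle)) / sqrt(se2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = se2 ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

def looks_like_audio(idle, probed, t_critical=2.0):
    """Flag a device when post-probe traffic differs from its idle baseline.

    t_critical is a hypothetical cutoff; a fuller version would convert
    t and df into a p-value instead of using a fixed threshold.
    """
    t, _ = welch_t(idle, probed)
    return abs(t) > t_critical
```

Because the test compares a device only against its own idle baseline, nothing in it depends on the device model, which is the property that lets the approach work on devices that have never been seen before.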

The researchers summarized their work this way:

At a high level, LeakyPick overcomes the research challenges by periodically transmitting audio into a room and monitoring the subsequent network traffic from devices. As shown in Figure 2, the main component of LeakyPick is a probing device that emits audio probes into its vicinity. By temporally correlating these audio probes with the observed characteristics of subsequent network traffic, LeakyPick identifies devices that have potentially reacted to the audio probes by sending audio recordings.

LeakyPick identifies network streams containing audio recordings using two key ideas. First, it looks for bursts of traffic after an audio probe. Our observation is that voice-activated devices generally don’t send a lot of data unless they are active. For example, our analysis shows that when idle, Alexa-enabled devices periodically send small bursts of data every 20 seconds, medium bursts every 300 seconds, and large bursts every 10 hours. We further discovered that when triggered by an audio stimulus, the resulting audio streaming burst has different characteristics. However, using traffic bursts alone produces high false positive rates.

Second, LeakyPick uses statistical probing. Conceptually, it first records a baseline measurement of idle traffic for each monitored device. It then uses an independent two-sample t-test to compare the characteristics of the device’s network traffic while idle and the traffic when the device communicates after the audio probe. This statistical approach has the advantage of being inherently device independent. As we showed in Section 5, this statistical approach works as well as machine learning approaches, but is not limited by a priori knowledge of the device. Therefore, it outperforms machine learning approaches in cases where there is no pre-trained model for the specific device type available.

Finally, LeakyPick works both for devices that use a trigger word and for devices that don’t. For devices like security cameras that do not use a trigger word, LeakyPick does not need to perform any special operations. Transmitting any audio will trigger the audio transmission. To handle devices that use a wake word or sound, for example voice assistants and security systems that react to glass breaking or dogs barking, LeakyPick is configured to prefix its probes with known wake words and sounds (for example, “Alexa,” “Hey Google”). It can also be used to fuzz test trigger words to identify words that will unintentionally transmit audio recordings.
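The trigger-word fuzzing idea from the summary above boils down to a simple loop: play a candidate word, then check whether the device streamed audio. The sketch below is hypothetical; `play` and `streamed_audio` are placeholder callables standing in for the speaker output and the traffic check, and none of these names come from the paper.

```python
import time

def fuzz_trigger_words(candidates, play, streamed_audio, settle_s=0.0):
    """Return the candidate words that caused a device to stream audio.

    candidates:     iterable of words or phrases to try
    play:           callable that plays one phrase through the speaker
                    (hypothetical; e.g. text-to-speech output)
    streamed_audio: callable returning True if traffic after the probe
                    looked like an audio upload (hypothetical)
    settle_s:       seconds to wait for the device to react (assumption)
    """
    triggers = []
    for word in candidates:
        play(word)
        if settle_s > 0:
            time.sleep(settle_s)
        if streamed_audio():
            triggers.append(word)
    return triggers
```

Passing the two callables in keeps the loop independent of any particular speaker or capture setup, so the same function can be exercised with stubs before it is pointed at real hardware.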

Protection against accidental and malicious leaks

So far, LeakyPick, named after its mission to pick out audio leaks from network-connected devices, has uncovered 89 non-trigger words that can cause Alexa to send audio to Amazon. With more use, LeakyPick is likely to find additional words in Alexa and other voice assistants. The researchers have already found several false positives in Google Home. The 89 words appear on page 13 of the paper linked above.

In addition to detecting unintentional audio streams, the device will detect virtually any activation of a voice assistant, including malicious ones. An attack demonstrated last year shone lasers at Alexa, Google Home, and Apple Siri devices, causing them to unlock doors and start cars when connected to a smart home. Sadeghi said that LeakyPick would easily detect such a hack.

The hardware prototype consists of a Raspberry Pi 3B connected by Ethernet to the local network. It is also connected by a headphone jack to a PAM8403 amplifier board, which in turn connects to a single generic 3W speaker. The device captures network traffic using a TP-LINK TL-WN722N USB Wi-Fi dongle that creates a wireless access point using hostapd, with dnsmasq as the DHCP server. All nearby wireless IoT devices will connect to that access point.

To give LeakyPick Internet access, the researchers turned on packet forwarding between the Ethernet interface (connected to the network gateway) and the wireless network interface. The researchers wrote LeakyPick in Python. It uses tcpdump to record packets and Google’s text-to-speech engine to generate the audio played by the probing device.
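As a rough illustration of how a Python tool might drive tcpdump for one device, the sketch below builds a capture command filtered by the device's hardware (MAC) address. The interface name, MAC address, and output path in the usage comment are made-up placeholders, and the helper is not taken from the researchers' code; only standard tcpdump options (`-i`, `-n`, `-w`) and the `ether src` filter are used.

```python
def tcpdump_command(interface, mac, outfile):
    """Build a tcpdump argument list that records packets sent by one device.

    interface: capture interface, e.g. the access point's wireless interface
    mac:       hardware address of the monitored device
    outfile:   path of the pcap file to write
    """
    return [
        "tcpdump",
        "-i", interface,   # capture on this interface
        "-n",              # do not resolve addresses to names
        "-w", outfile,     # write raw packets to a pcap file
        "ether", "src", mac,  # only frames originating from this device
    ]

# Hypothetical usage (requires root privileges and tcpdump installed):
#   import subprocess
#   cmd = tcpdump_command("wlan0", "aa:bb:cc:dd:ee:ff", "/tmp/device.pcap")
#   capture = subprocess.Popen(cmd)
```

Filtering on the source hardware address matches the article's point that the device behind each packet can be identified even though the payload is encrypted.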

With the increasing use of devices that transmit nearby audio and the growing list of ways they can fail or be hacked, it is good to see research proposing a simple and inexpensive way to guard against leaks. Until devices like LeakyPick are available, and even after that, people should carefully ask themselves whether the benefits of voice assistants are worth the risks. When assistants are present, users should keep them turned off or unplugged, except when they are in active use.