
Some social networks have already implemented policies specifically against misinformation about the Covid-19 vaccine; others are still deciding on the best approach or are relying on existing policies for Covid-19 and vaccine-related content. But making a policy is the easy part – consistently enforcing it is where platforms often fall short.

Facebook, Twitter and other platforms have a lot of work ahead of them. The coronavirus and the pending vaccines have already been the subject of numerous conspiracy theories, prompting the platforms to take action or create new policies. Some have made false claims about the effectiveness of masks, or unsubstantiated claims that people who get vaccinated will be microchipped.

Earlier this month, Facebook removed a large private group dedicated to anti-vaccine content. But many groups dedicated to criticizing vaccines remain. A cursory search by CNN Business found at least a dozen Facebook groups advocating against vaccines, with memberships ranging from a few hundred to tens of thousands of users. At least one group was specifically dedicated to opposing a Covid-19 vaccine.

Brooke McKeever, an associate professor of communications at the University of South Carolina who has studied vaccine misinformation and social media, expects an increase in anti-vaccine content, calling it a “big problem.”

"The speed at which [these vaccines] were developed is a concern for some people, and the fact that we don't have a history with this vaccine, people are going to be scared and unsure about it," she said. "They may be more likely to believe misinformation because of it."

That has consequences in the real world. McKeever’s fear: that people will not get the vaccine and that Covid-19 will continue to spread.

The report said that social media platforms have done the “absolute minimum.”

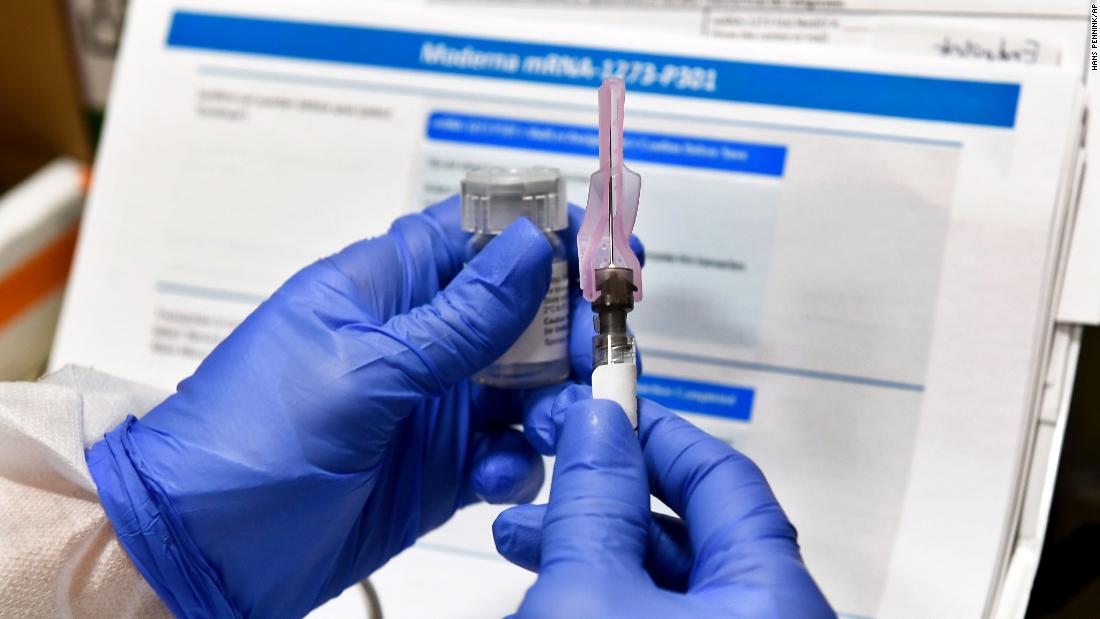

Here is where the platforms stand so far on combating misinformation about the Covid-19 vaccine.

Facebook and Instagram

“We allow content that discusses Covid-19 related studies and vaccine trials, but we will remove claims that there is a safe and effective vaccine for Covid-19 until such a vaccine is approved by global health authorities,” a Facebook spokesperson said. “We also reject advertisements that discourage people from getting vaccinated.”

Twitter

A Twitter spokesperson said the company is still working out its policy and product plans ahead of the availability of "a viable and medically approved vaccine."

Since 2018, the company has shown a prompt directing users to a public health resource when they search for vaccine-related terms. In the US, it points people to vaccines.gov.

YouTube

A YouTube spokesperson said it will continue to monitor the situation and update policies as necessary.

TikTok

TikTok said it removes misinformation related to Covid-19 and vaccines, including anti-vaccine content. The company said it finds such content both proactively and through reports from its users.

TikTok also works with fact-checkers, including PolitiFact, Lead Stories, SciVerify and AFP, to help assess the accuracy of content.

On videos related to the pandemic, whether or not they are misleading, TikTok adds a tag reading "Get the Facts about Covid-19," which leads to a hub with information from sources such as the World Health Organization.
