Today, DeepMind announced that it has largely solved one of biology's outstanding problems: how the string of amino acids in a protein folds up into the three-dimensional shape that enables its complex functions. This is a computational challenge that has resisted the efforts of many very smart biologists for decades, despite the application of supercomputer-level hardware to the calculations. DeepMind instead trained its system using 128 specialized processors for a few weeks; it now returns potential structures within a few days.

The limitations of the system are not yet clear: DeepMind says it is currently planning a peer-reviewed paper and has so far made only a blog post and several press releases available. But after more than doubling performance on this problem in just four years, the system now does better than anything that came before it. Even if it is not useful in every circumstance, the likely implication is that the structure of many proteins can now be predicted from nothing but the DNA sequence of the genes that encode them, which would mark a major change for biology.

Between folds

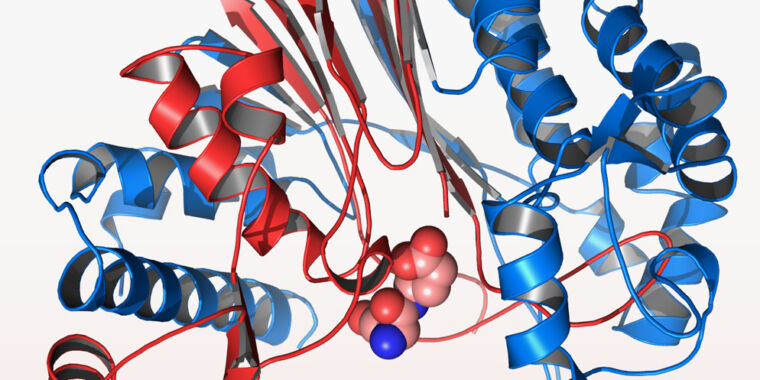

To make a protein, our cells (and those of every other organism) chemically link amino acids together to form a chain. This works because every amino acid shares a backbone that can be chemically connected to form a polymer. But each of the 20 amino acids used by life has a different set of atoms attached to that backbone. These can be charged or neutral, acidic or basic, etc., and these properties determine how each amino acid interacts with its neighbors and the environment.

The interactions of these amino acids determine the three-dimensional structure that the chain adopts after it is formed. Hydrophobic amino acids end up in the interior of the structure to avoid the watery environment. Positively and negatively charged amino acids attract each other. Hydrogen bonds drive the formation of regular spirals or parallel sheets. Collectively, these forces drive what would otherwise be a disordered chain to fold into an ordered structure. And that ordered structure in turn determines the behavior of the protein, allowing it to act as a catalyst, bind to DNA, or drive muscle contraction.

Determining the order of amino acids in a protein chain is relatively easy: it is defined by the order of bases in the DNA of the gene that encodes the protein. And because we have gotten so good at sequencing whole genomes, we have seen a huge increase in gene sequences and now have a huge surplus of protein sequences available. For many of them, however, we do not know what the folded protein looks like, which makes it difficult to determine how they function.
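To make the "relatively easy" part concrete, here is a minimal sketch of how a protein sequence can be read off a gene's DNA using the standard genetic code. It assumes a coding sequence with no introns that starts in the correct reading frame; the function name `translate` and the example sequence are illustrative, not taken from DeepMind's materials.

```python
# Standard genetic code, built from the conventional T, C, A, G ordering of bases.
# "*" marks a stop codon.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

CODON_TABLE = {
    b1 + b2 + b3: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, b1 in enumerate(BASES)
    for j, b2 in enumerate(BASES)
    for k, b3 in enumerate(BASES)
}


def translate(dna: str) -> str:
    """Translate coding DNA into one-letter amino acid codes.

    Assumes the sequence starts in frame; stops at the first stop codon.
    """
    protein = []
    for start in range(0, len(dna) - 2, 3):
        residue = CODON_TABLE[dna[start:start + 3].upper()]
        if residue == "*":
            break
        protein.append(residue)
    return "".join(protein)


print(translate("ATGGCCTGGTAA"))  # prints "MAW"
```

The lookup is a fixed table, which is why sequences are cheap to obtain; nothing in it says anything about the shape the resulting chain will fold into.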

Given that the backbone of a protein is very flexible, almost any two amino acids in the protein could potentially come into contact with each other. So figuring out which ones actually interact in the folded protein, and how those interactions minimize the free energy of the final configuration, becomes an intractable computational challenge once the number of amino acids gets too large. Essentially, when any amino acid could occupy any potential coordinates in 3D space, figuring out what to put where becomes difficult.
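A rough back-of-the-envelope sketch shows why the problem gets out of hand. The number of amino acid pairs that could interact grows quadratically with chain length, and the number of possible chain conformations grows exponentially. The figure of three backbone states per amino acid below is purely an illustrative assumption, not a number from DeepMind or from the text above.

```python
def candidate_pairs(n_residues: int) -> int:
    """Number of distinct amino acid pairs that could, in principle, be in contact."""
    return n_residues * (n_residues - 1) // 2


def candidate_conformations(n_residues: int, states_per_residue: int = 3) -> int:
    """Crude conformation count, assuming each residue independently picks
    one of a few backbone states (an illustrative assumption)."""
    return states_per_residue ** n_residues


for n in (10, 100, 300):
    pairs = candidate_pairs(n)
    digits = len(str(candidate_conformations(n)))
    print(f"{n} residues: {pairs} possible pairs, "
          f"conformation count has {digits} digits")
```

Even with this very coarse model, exhaustively scoring every conformation of a modest 100-residue protein is out of reach, which is why prediction methods have to narrow the search rather than enumerate it.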

Despite the difficulties, there has been some progress, including through distributed computing and the gamification of folding. But an ongoing, biennial event called the Critical Assessment of protein Structure Prediction (CASP) has seen very erratic progress throughout its existence. And in the absence of a successful algorithm, people are left with the arduous task of purifying the protein and then working out the structure of the purified form using X-ray diffraction or cryo-electron microscopy, an effort that can take years.

DeepMind enters the arena

DeepMind is an AI company that was acquired by Google in 2014. Since then, it has made several splashes, developing systems that have successfully taken on humans at Go, chess, and even StarCraft. In many of its notable successes, the system was trained simply by being given the rules of the game before being set loose to play itself.

That approach is incredibly powerful, but it wasn't clear that it would work for protein folding. For one thing, there is no obvious external standard for a “win”: if you find a structure with a very low free energy, that does not guarantee there isn't one with something slightly lower. There is also not much in the way of rules. Yes, amino acids with opposite charges will lower the free energy if they are next to each other. But that won't happen if it comes at the cost of breaking dozens of hydrogen bonds and sticking hydrophobic amino acids out into the water.

So how do you adapt an AI to work under these conditions? For their new algorithm, called AlphaFold, the DeepMind team treated the protein as a spatial network graph, with each amino acid as a node and the connections between them mediated by their proximity in the folded protein. The AI itself is then trained to figure out the configuration and strength of these connections by feeding it the previously determined structures of 170,000 proteins obtained from public databases.
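The graph idea can be sketched in a few lines. The example below is not AlphaFold's actual representation, which has not been published in detail; it only illustrates "amino acids as nodes, proximity as connections" by building a contact graph from known 3D coordinates. The 8 Å cutoff, the `contact_graph` name, and the toy hairpin coordinates are all assumptions made for illustration.

```python
import math
from itertools import combinations


def contact_graph(coords, cutoff=8.0):
    """Build a graph with one node per residue and an edge between any two
    residues closer than `cutoff` (in angstroms). Edge values are distances."""
    graph = {i: {} for i in range(len(coords))}
    for i, j in combinations(range(len(coords)), 2):
        distance = math.dist(coords[i], coords[j])
        if distance <= cutoff:
            graph[i][j] = distance
            graph[j][i] = distance
    return graph


# Toy 8-residue "hairpin": the chain runs out along x, then doubles back 5 Å away.
toy_coords = [
    (0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0),
    (11.4, 5.0, 0.0), (7.6, 5.0, 0.0), (3.8, 5.0, 0.0), (0.0, 5.0, 0.0),
]

graph = contact_graph(toy_coords)
print(sorted(graph[0]))  # neighbors of the first residue: [1, 2, 6, 7]
```

Note that the first and last residues end up connected even though they are at opposite ends of the sequence. Recovering that kind of long-range connection from the sequence alone is the hard part that a trained system has to learn.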

When given a new protein, AlphaFold searches for any proteins with a related sequence and aligns the related portions of the sequences. It also searches for proteins with known structures that have regions of similarity. Typically, these approaches are good at optimizing the local features of a structure but not so great at predicting the overall protein structure: putting together a set of highly optimized fragments does not necessarily produce the best whole. This is where an attention-based deep-learning portion of the algorithm was used to ensure that the overall structure is coherent.

Clear success, but with limitations

For this year’s CASP, AlphaFold and algorithms from other entrants were set loose on a series of proteins that were either not yet solved (and were solved as the challenge went on) or were solved but not yet published. So there was no way for the algorithms' creators to prime their systems with real-world information, and the algorithms' output could be compared to the best real-world data as part of the challenge.

AlphaFold did far better than any other entry. For about two-thirds of the proteins it predicted a structure for, it was within the experimental error you would get if you tried to replicate the structural study in a laboratory. Overall, on an evaluation of accuracy that ranges from zero to 100, it averaged a score of 92, again in the range you would see if you tried to obtain the structure twice under two different conditions.
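CASP's zero-to-100 accuracy score is the Global Distance Test (GDT_TS), which averages the percentage of amino acids that land within 1, 2, 4, and 8 Å of their experimentally determined positions. The sketch below is a simplified version of that idea: it assumes the predicted and experimental structures have already been superimposed, whereas the real metric searches over superpositions. The function name and the toy coordinates are illustrative.

```python
import math


def gdt_ts_like(predicted, experimental, thresholds=(1.0, 2.0, 4.0, 8.0)):
    """Simplified GDT_TS-style score on a 0-100 scale.

    Assumes both coordinate lists describe the same residues in the same
    order and are already superimposed (the real metric optimizes this).
    """
    if not predicted or len(predicted) != len(experimental):
        raise ValueError("need two non-empty coordinate lists of equal length")
    deviations = [math.dist(p, e) for p, e in zip(predicted, experimental)]
    fractions = [
        sum(d <= t for d in deviations) / len(deviations) for t in thresholds
    ]
    return 100.0 * sum(fractions) / len(fractions)


experimental = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
# Same positions, displaced by 0.5, 1.5, 3.0 and 10.0 angstroms respectively.
predicted = [(0.0, 0.5, 0.0), (3.8, 1.5, 0.0), (7.6, 3.0, 0.0), (11.4, 10.0, 0.0)]

print(gdt_ts_like(predicted, experimental))      # prints 56.25
print(gdt_ts_like(experimental, experimental))   # prints 100.0
```

Because the score rewards getting most atoms approximately right rather than all of them exactly right, an average in the 90s implies that the large majority of amino acids sit within an angstrom or two of where experiments place them.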

By any reasonable standard, the computational challenge of figuring out a protein's structure has been solved.

Unfortunately, there are a lot of unreasonable proteins. Some immediately get stuck in the membrane; others quickly pick up chemical modifications. Still others require extensive interactions with specialized enzymes that burn energy in order to force other proteins to refold. In all likelihood, AlphaFold will not be able to handle all of these edge cases, and without an academic paper describing the system, it will take some time, and some real-world use, to discover its limitations. This is not to take away from an incredible achievement, just to warn against unreasonable expectations.

The key question now is how quickly the system will be made available to the biological research community so that its limitations can be determined and we can start putting it to use in cases where it works well and has significant value, such as the structures of proteins from pathogens or of the mutated forms found in cancerous cells.