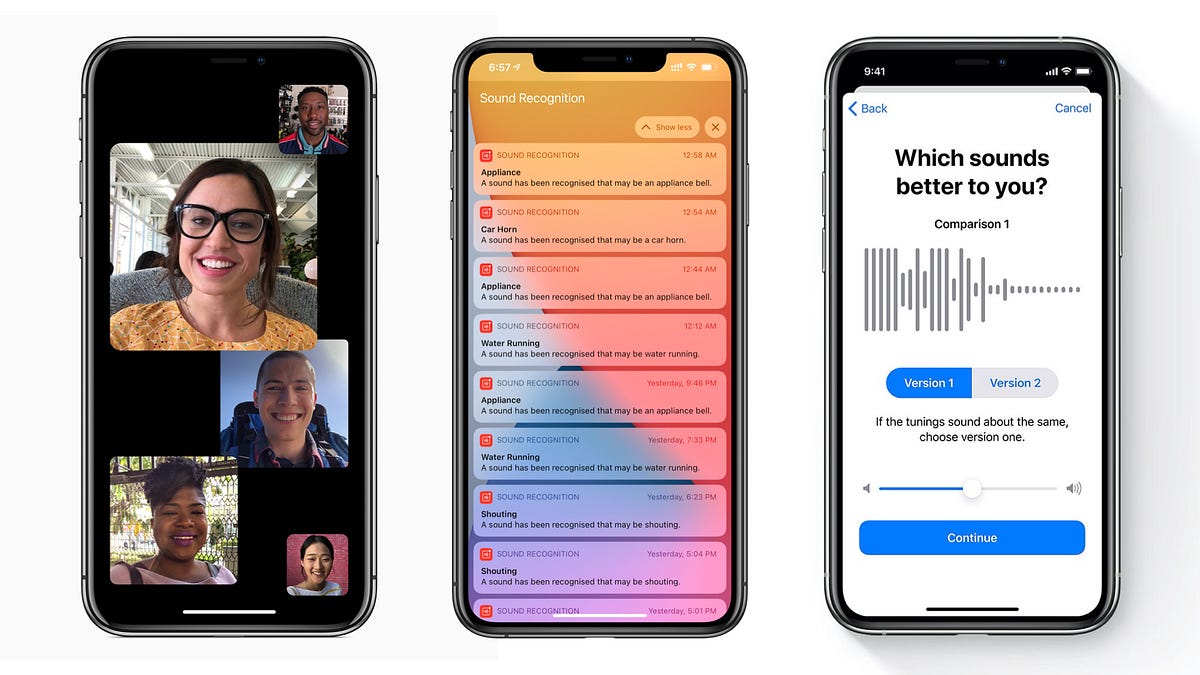

Group FaceTime sign language detection, Sound Recognition, and Headphone Accommodations

The all-new, online 2020 Worldwide Developers Conference (WWDC) concluded this week, with announcements of some of the most important updates to Apple's platforms, including new features coming to iOS 14, iPadOS 14, watchOS 7 and macOS Big Sur.

Accessibility is one of Apple’s core values, and the company takes accessible design very seriously. It remains focused on making its software and hardware products accessible and inclusive to as many users as possible. Last year, with iOS 13, Apple introduced some of its most significant accessibility features to date, such as Voice Control, which lets people operate their device entirely by voice, a huge win for users with motor disabilities.

Today, we are looking at the new accessibility features Apple has added, particularly those that help deaf and hard-of-hearing users worldwide.

Before iOS 14, when someone spoke during a Group FaceTime call, their tile would expand in size, making it more prominent. This is useful: whenever someone says something, the focus shifts to them on everyone’s devices, which matters because Group FaceTime supports up to 32 people. But you can see how this goes wrong for users who communicate in sign language on FaceTime calls. Automatic speaker prominence is not a deaf-friendly feature.

Deaf and hard-of-hearing users have relied on FaceTime and other video calling services for a long time, connecting with others in a way that did not exist just a few decades ago. Now they can pick up their phone or laptop and start a conversation. You can see how important FaceTime is to many people, so this new feature will have a positive impact on many users of Apple products.

In iOS 14, when a participant starts signing, their tile now becomes bigger, drawing attention to them during a Group FaceTime call.

Sound Recognition is a really cool new feature. It does exactly what it says:

“Your iPhone will continuously listen for certain sounds, and using on-device intelligence, will notify you when sounds may be recognized.”

While respecting the user’s privacy through on-device analysis, it listens for environmental sounds such as fire alarms, sirens, smoke detectors, cats, dogs, appliances, car horns, doorbells, door knocks, running water, crying babies and people shouting. When it sends a notification, users can temporarily snooze notifications for that specific sound for 5 minutes, 30 minutes, or 2 hours.

It is also important to note that Apple says: “Sound Recognition should not be relied upon in circumstances where you may be harmed or injured, in high-risk or emergency situations, or for navigation.”

Then there is Headphone Accommodations. In Apple’s words:

“This new accessibility feature is designed to amplify soft sounds and adjust certain frequencies for an individual’s hearing, to help music, movies, phone calls, and podcasts sound more crisp and clear.”

There’s also a cool Custom Audio Setup in which you listen to two audio samples with different frequency boosts and choose which version sounds best. It helps you tune the Headphone Accommodations settings.

During the presentation, Apple mentioned that it also works with Transparency mode on AirPods Pro, which will make “quiet voices more audible and tune the sounds in your environment to your hearing needs.”

Headphone Accommodations is available on Apple and Beats headphones with the H1 headphone chip, such as 2nd generation AirPods, AirPods Pro, Powerbeats Pro and more, as well as EarPods.

There are more accessibility features in iOS 14 that are not specifically aimed at users with hearing loss. One of my favorites is Back Tap, where you double-tap or triple-tap the back of your iPhone to perform an action like locking the screen, invoking Spotlight, or running a shortcut. I have mine set to open the camera when I triple-tap the back of my iPhone.

You can learn more about the rest of the iOS 14 feature set, and everything else Apple announced at WWDC this year, in the Apple Newsroom.

I think we will love iOS 14 when it comes out later this year, and I’m sure Apple has worked harder than ever this year, refining the user experience as it does year after year.

Thank you for reading!